This essay has two parts. Part One is about the problems with using the words we use to describe human cognition to talk about LLMs and why Wittgenstein’s term “language games” is useful when thinking about natural language processing.

The two parts can be read in any order, but the chef recommends starting with Part One.

Imitation games as a proto-paradigm

In Computing Machinery and Intelligence, Alan Turing argued that when we consider the question, “Can machines think?” we shouldn’t start with what the words machine or think mean.

The definitions might be framed so as to reflect so far as possible the normal use of the words, but this attitude is dangerous. If the meaning of the words "machine" and "think" are to be found by examining how they are commonly used it is difficult to escape the conclusion that the meaning and the answer to the question, "Can machines think?" is to be sought in a statistical survey such as a Gallup poll. But this is absurd. Instead of attempting such a definition I shall replace the question by another, which is closely related to it and is expressed in relatively unambiguous words.

The replacement Turing offered is the imitation game, a version of what became known as the Turing Test. The game explicitly rules out considering how context changes the meanings of key terms. Don’t ask, “What does thinking mean when it happens in a machine?” Ask instead, “How do machines perform when playing games with humans?” Turing’s test helped establish the field of artificial intelligence in that it provided what has been called a proto-paradigm, meaning a way of doing things: “bundles of assumptions and practices that get handed down and spread around.” So, not a full-blown paradigm, just a way to get to work on an interesting problem without the messy effort of establishing fully developed models and definitions for what you’re trying to accomplish.

One reason for this proto-paradigm’s success is that Turing didn’t have to work very hard to get people to imagine computers acting like humans. That framework was already in place before Turing’s influential articles and the famous summer workshop at Dartmouth.

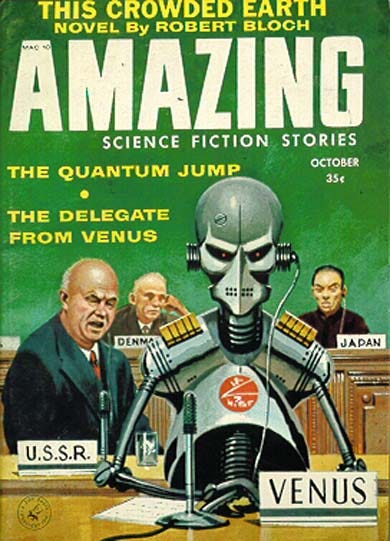

Amazing stories of the wonderful and the weird published in mass periodicals during the early twentieth century updated ancient tales of golems and mechanical monsters using the language of science, especially electrical engineering and physics. Science fiction and the field of artificial intelligence grew together as a symbiotic cultural development. Turing’s game was preceded, and possibly inspired, by a well-known short story published in Wonder Stories in 1934 about the challenge of evaluating the language of an alien intelligence. Turing himself wrote an unpublished short story in the genre in the 1950s. In the 1960s, Philip K. Dick wrote a novel built around the dramatic tension of playing the imitation game with killer robots, which inspired the 1980s movie Blade Runner.

From Metropolis (1927) to I, Robot (1939) to 2001 (1968) to Terminator (1984), science fiction has shaped our understanding of thinking machines before, during, and after artificial intelligence researchers started to build them. The commotion about Her (2013) and Sky, a voice created for GPT-4o, is only the most recent example of how deeply intertwined the history of our cultural imagination of talking machines is with our attempts to build them.

The science-fiction writer Charlie Stross has a particularly good essay on the downsides of this connection, arguing that using entertainment set in the future as a roadmap to build and understand technology is a bad idea. As he puts it, “The future is a marketing tool.” Artificial Intelligence as a field of study has used this fact to attract funding from the earliest conferences to the latest start-ups. When ChatGPT became a surprise hit, it was easy enough for OpenAI and its competitors to sell generative AI to customers and investors using the language of the future supplied by science fiction. Sam Altman and OpenAI have been very effective at playing it straight in official announcements—we’re just engineers building cool stuff with boring technical names like generative pre-trained transformers—while intensifying the speculative hype through social media and comments to the press.

The transformer-based models themselves seem to be in on the game. Their datasets contain massive amounts of cultural information, so they slip easily into roles shaped by the narratives of novels and films. Think of Kevin Roose’s famous conversation with Bing playing the weirdly jealous and obsessive lover, or the many stories being written about people falling for chatbots as if they were characters in Ex Machina or Her. We can scold people for anthropomorphizing machines—and I do—but the truth is, our new talking machines are really good at pretending to be characters in familiar stories. The results are delightful and disturbing. Talking to one is more interesting than trying to understand how it works or get it to do something useful for you.

One writer nails the situation: “the core A.I. product is the experience of talking to a computer that talks back.”

Treating LLMs like humans or characters playing thinking machines from sci-fi is fun. Turning that experience into something that is both a game and a science experiment was a brilliant move by a thinker who, as one account has it, was “an immersive and experiential worker.” Computing Machinery and Intelligence was a thought experiment, a story about how computation and cognition might be related. As one commentator argues, “Turing didn’t expect this imitation game to be implemented as an objective test of ‘intelligence’ for computers,” but that has not stopped many people from treating it as such.

The imitation game provided a bundle of assumptions and practices based on an experimental definition of thinking. It organized the scientific work of understanding computational technologies by building them and then comparing their outputs to those of humans. For a long while, the outputs were quite easy to distinguish. Scientists built digital computers that could match patterns and solve problems, but none of them could mimic human language all that well. Still, thanks to Eliza, we learned that it doesn’t take much conversational depth for humans to really enjoy talking to a computer that talks back.

Seventy-two years later, the language outputs are indistinguishable, and the fascination with talking to talking machines has intensified. Transformer-based models now play language games so well that many say they pass, and some say they broke, the Turing Test. We are left with entertaining tools that feel like they are from the future but have surpassed the benchmark we used to build them.

Artificial Intelligence is post-proto-paradigmatic

With the imitation game dislodged, there is little clarity on a framework for the effort to develop better language models. Trying to build general intelligence only works if we can agree on a definition of general intelligence or at least come up with a new benchmark. While there are ardent believers in the g factor who would say that giving an LLM an IQ test tells us all we need to know, artificial general intelligence (AGI) gives every appearance of being the twenty-first century’s version of luminiferous aether, the entirely theoretical substance that many scientists in the nineteenth century believed was what allowed light to move.[1] General intelligence suggests a goal, but unlike the imitation game, it offers no clear benchmark.

However inadequate as a scientific theory, AGI is an excellent marketing concept right out of the playbook Stross described. We can easily imagine AGI because we know so many stories about sentient machines. The lack of specificity when it comes to general intelligence does not seem to worry the enthusiasts. Understanding is not necessarily the goal for those selling LLMs or making bets in equity markets on generative AI. To update the Upton Sinclair line: It is difficult to get people to understand something when their stock options and investments depend upon their not understanding it.

While the headlines are filled with the latest OpenAI shenanigans and overconfident predictions about the disruptions soon to be wrought by LLMs, those of us looking to understand transformer-based language models are working within the remnants of a broken proto-paradigm. Researchers continue to try to understand computational processes by comparing them to human cognition, even though the measures they use don’t explain much. When ChatGPT “passes” the Uniform Bar Exam and the United States Medical Licensing Examination, everyone knows that this does not mean transformer-based language models can practice law or medicine. When Gemini scores 90% on the Massive Multitask Language Understanding test, it doesn’t mean the model understands the contexts for the words it generates. Using computational processes to answer questions on a language test designed for humans echoes Turing’s Test, but the scores don’t tell us anything we don’t already know from reading an essay generated by ChatGPT.

Those essays and other cultural outputs from transformer-based language models are uncannily good. However, there is more to experience than the language we use to describe it. An LLM’s data is limited to words and cultural artifacts. Yet, many imagine LLMs will develop the capacity to act in the human social world in ways that exceed human capacity. That somehow, they will become the characters we know from science fiction. Exactly how transformer-based language models go from producing weird and sometimes useful outputs to self-directed, human-level cognition is not clear, but when enthusiasts talk about AGI, they seem to think some combination of giving the models robot bodies, training them to solve new types of problems, and improving their capacity to form digital models of their environment will spark consciousness.

Psychology has an explanation for why consciousness exists, but it doesn’t fit LLMs

William James was the first writer to describe consciousness as a stream, using the metaphor to imagine cognitive processes as continuously flowing like water through a landscape. He was also among the first to describe, using scientific language, the full range of human and animal cognitive processes within a Darwinian framework. That is, he described cognitive processes as serving evolutionary functions; thinking arose because it made survival more likely for animals that think. Intelligence is best understood in terms of fostering capabilities that are effective in helping organisms adapt to natural environments.

According to James, “consciousness is at all times primarily a selecting agency.” He means not only that the process of natural selection selects for animals that have it but also that the function of consciousness is to select what an animal should notice in its environment in order to survive. Consciousness determines what an animal should pay attention to. Such attention “is always in close connection with some interest felt by consciousness to be paramount at the time.”

James believed most talk about consciousness was just metaphysical speculation, but in arguing for its origin in the development of life, he was intervening in the discourse around the new science of psychology in much the same way Turing did with artificial intelligence or Einstein with physics. James was arguing for a new basis for the study of the human mind. Cognitive processes described by terms like attention or intelligence were understood in the context of consciousness. As James put it succinctly, “Cognition is a function of consciousness.” Thinking is a mechanism that functions so that animals adapt to their environment. Science may not have learned much more about consciousness since James wrote about it, but if you buy the theory of evolution, it follows that one thing we know is that consciousness arose through natural selection.

This functional explanation for cognition does not apply to human-built LLMs. Without a more specific scientific theory of how consciousness would arise, we have no basis to compare what happens inside human brains to what happens inside LLMs. LLMs are purpose-built tools designed by humans. They did not develop through the process of adaptation to a natural environment. This difference helps explain why using the vocabulary of human cognition to describe information processing in LLMs is so confusing.[2]

Turing’s proto-paradigm bypassed the problem of different contexts by removing context from the experiment. Blindly comparing the outputs of machines and humans pushed questions about the relationship between cognition and computation outside the framework, including questions about how either relates to consciousness. The fact that consciousness is not well-defined or measurable did not matter. Now, it does, but only because so many seem to assume that AGI is the only framework for continuing to investigate and develop LLMs. There are alternatives.

It may seem counterintuitive to look to the late nineteenth century for theories to support twenty-first-century scientific inquiry, but evolution is still the best explanatory framework for how cognitive processes developed. We still use the conceptual framework laid out in Principles of Psychology (1890). When he wrote it, James was both a bench scientist doing path-breaking experiments on the brain and an accomplished writer of philosophical essays about consciousness. The difference between empirical investigation and metaphysical speculation was important to James even as he did both. Public understanding of LLMs would greatly benefit if more writers acknowledged the difference.

In the preface to Principles, he defines what he means by psychology by opposing it to metaphysics.

Psychology, the science of finite individual minds, assumes as its data (1) thoughts and feelings, and (2) a physical world in time and space with which they coexist and which (3) they know. Of course these data themselves are discussable; but the discussion of them (as of other elements) is called metaphysics and falls outside the province of this book…All attempts to explain our phenomenally given thoughts as products of deeper-lying entities (whether the latter be named 'Soul,' 'Transcendental Ego,' 'Ideas,' or 'Elementary Units of Consciousness') are metaphysical.

Experience, not language or computation, was James’s primary subject as both a psychologist and a philosopher. In the decades following his death in 1910, much of psychology turned to computation as a way to understand the mind, leaving qualitative methods and theories to smaller subfields with less scientific status. At the same time, academic philosophy appeared to split between those interested in formal logic and those interested in language and culture.[3] James wrote before those internal disciplinary divisions emerged and before data became more or less synonymous with numbers, so it is important to note that, for James, subjective experiences are observable data.

James’s conceptual framework in Principles focuses on data drawn from observing behaviors, including his own, of living organisms acting in the universe. His #3 is complex and subtle. After all, one weird thing about knowing your own thoughts and feelings, what has been called the intrinsic perspective, is that while I know I am conscious, the only evidence I have that you are conscious is speculative.

As James says, “The universal conscious fact is not 'feelings and thoughts exist,' but 'I think' and 'I feel.'” We believe other humans are conscious based primarily on self-knowledge. There are correlations between these self-reports and other observable data, but the best evidence that other humans are conscious is that you describe your subjective experiences, and I recognize, in your words, something of my own subjectivity.

Reports from LLMs about their subjective experience make for interesting news articles but do not trigger the same recognition, except perhaps through the suspension of disbelief we call the Eliza Effect. We know that human brains and LLMs are structurally different. We know that when an LLM generates language about thoughts and feelings, it is based on probabilities arrived at computationally, not expressions based on a stream of experience.

LLMs are a cultural technology

If the imitation game has outlived its usefulness as an experimental benchmark, if general intelligence is just a marketing tool, and if speculation about consciousness in machines is a scientific dead end, what possible basis is there for developing a conceptual framework to understand transformer-based language models? What would a new framework look like?

The answer starts with keeping in mind James’s distinction between explaining data and speculating about deeper-lying entities. Exploring how LLMs work through observation and experimentation is better than giving them cognitive tests designed for humans and saying “Wow!” at the results. Anthropic’s recent experiment is a good example of useful research. It involved manipulating Claude 3 Sonnet’s word vectors, which is how an LLM mathematically represents and manipulates natural language, and observing what changed.

Lee explains what the researchers found here. Neither the report's authors nor Lee sensationalize the results by talking about similarities with human neural networks or speculating about what the explanations suggest about consciousness.

Of course, a bunch of observations carefully described is not enough. We need a larger framework to make sense of this and other research into LLMs. What besides consciousness is there? The answer I find more convincing every day is that transformer-based language models are a new type of technology for manipulating language and cultural artifacts. Reading Lee’s account of how scientists transformed “Claude’s otherwise inscrutable numeric representation of words into a combination of ‘features,’ many of which can be understood by human beings” provides insight into how an LLM’s computational processes arrive at surprisingly useful cultural outputs.

Transformer-based language models are best understood as a significant addition to our existing educational and cultural technology set. This new technology’s predecessors are not electricity or fire but writing and the printing press. It is built on a shared capacity for language, but that fact does not require us to imagine that capacity involves machines thinking the way humans do. Consider generative AI as a complicated set of tools for accessing and manipulating the data organized on the internet. Alison Gopnik appears to be the first to suggest this approach. Here is her take on the idea. This essay by Henry Farrell and this blog post by Cosma Shalizi also provide good introductions.

Thinking of LLMs as a cultural technology frames scientific investigation on questions about how computational processes work without getting distracted by similarities to the human mind or fantasies of conscious machines. It focuses attention on the social impacts and uses of the technology, amplifying some of the important questions skeptics are asking while expanding the limited vision of LLMs as universal assistants or personalized tutors.

The real potential here is not in agents who will do mundane knowledge work or sentient machines who will watch over us with loving grace but in what Shalizi calls collective cognition, which I understand as a kind of mash-up between what Ethan Mollick likes to call co-intelligence and what Brad DeLong likes to call anthology intelligence. In other words, think of generative AI as a form of human-built, collective computational intelligence ready to support humans as they go about solving social problems.

Along with worrying about their social effects and being careful because their outputs are based on probabilities rather than experience, we should approach these tools as an opportunity to reform schools, hospitals, and jails, as well as the bureaucratic systems of education, medicine, and criminal justice that manage those institutions. And sure, businesses and markets, too. But when thinking about business, especially the technology companies building and selling these tools, we should ignore the science-fiction dreams used to market them. Instead, let’s focus on their potential to remake democratic society for the better.

I’m primarily interested in educational bureaucracies in the age of generative AI and in questions about how nineteenth-century writers like James and other early pragmatists can help us think more clearly about social problems in the context of new cultural technologies. But that’s just my angle on this.

What’s yours? And how does thinking about transformer-based language models as a cultural technology instead of a step on the road to AGI change it?

This was Part Two. Here is Part One of On the Language of LLMs.

𝑨𝑰 𝑳𝒐𝒈 © 2024 by Rob Nelson is licensed under CC BY-SA 4.0.

[1] I do not believe I am the first person to make this analogy. I would be most grateful to any reader who points me to where I may have heard someone make this connection… I suspect a podcast.

[2] Computationalist theories of cognition assume that brain activity is computational, or maybe, that computational models of what we think brains might be doing explain cognitive processes in humans. These assumptions make a big deal out of the fact that neural networks in language models and human brains are superficially similar. This line of thinking generates a great deal of philosophical speculation and some lovely-looking mathematical models of brain activity, but so far, it has done little to advance our understanding of cognition and next to nothing about consciousness.

[3] Like most simple stories about the history of ideas, the division between “analytic” and “continental” philosophy leaves out a great deal. For example, pragmatism is an active philosophical tradition on both sides of the Atlantic and the islands between. It does not fit comfortably in this narrative.

Many thanks for the much needed critical analysis of the language and conceptual frameworks that are being bandied about to describe LLMs. There really should be a clearer distinction between human cognition and the “computational intelligence” of LLMs.

As you point out, the terminological appropriation of human cognitive terms like “attention” and “intelligence” obscures things – especially when not even the engineers really know what is going on with these LLM computations. Hopefully, more work like Anthropic’s will shed more light on how word vectors affect LLM output. We need a more transparent understanding of the workings of these black boxes.

But on at least the superficial level of language production, there are still conceptual similarities between how humans generate much of real-time speech and language and how LLMs generate text. There are clear parallels: both are basically associative/sequential probabilistic processes based on weighted frequencies. But there are crucial differences. I am working on a paper exploring how this doesn’t capture how humans write and think more deeply and “creatively” about things, which depends more on hierarchical and iterative processing. I am with Yann LeCun on these limitations of LLMs and how far away they are from human cognition.

Unfortunately, I think we are a long way from even understanding human cognition and consciousness. Yet I am optimistic that generative AI advances and more transparent/explainable AI will help refine our understanding of what human consciousness and cognition are and what they are not.