Ethan Mollick says anthropomorphizing AI is a sin of necessity. Repent! I say.

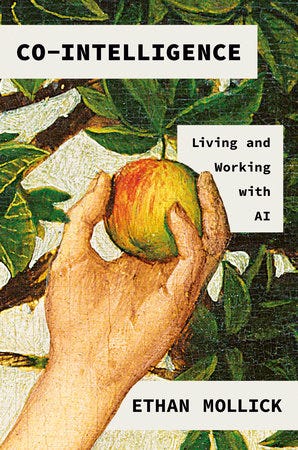

A review of Co-Intelligence

In the year following the release of ChatGPT, thousands of LinkedIn profiles were updated with phrases like AI Innovator, AI Founder, AI Consultant, AI Keynote Speaker, or even just deeply curious about generative AI. It seemed everyone had something to say about AI, and many of them took to LinkedIn to tout their expertise and their willingness to get paid to explain what was happening to the bewildered and anxious. Ethan Mollick's profile read simply “Associate Professor at The Wharton School.” Mollick didn't need to proclaim his expertise; he performed it, posting prolifically, often three or four times a day, about an experience with ChatGPT or a research paper he’d run across. He also wrote a weekly essay on his blog, One Useful Thing, which became every thinking person’s essential reading about AI.

A few months ago, he updated his profile, adding, “Author of Co-Intelligence, out April 2!” The exclamation point is unlike him. Compared to most of those writing about AI, Mollick's style is understated and direct, presenting generative AI as a subject for genuine inquiry, not a reason to issue proclamations. This approach makes his writing valuable, even when he says something you don’t agree with.

Although it rehashes the best of One Useful Thing, and its examples and stories will be mostly familiar to anyone who has followed AI news for the past year, Co-Intelligence weaves this material effectively with Mollick’s own experiments with AI. The book pulls it all together, distilling his advice into four rules, giving brief overviews of everything from the history of chatbots to the development of transformers (the “T” in GPT) to the homework apocalypse, and offering insights such as his challenge to those promoting prompt engineering as an ‘essential’ skill for the AI workplace. He argues that skill at crafting prompts is useful only in the short term, since AI “will only get better at guiding us, rather than requiring us to guide it. Prompting is not going to be that important for that much longer.”

Although he is no simple-minded techno-optimist, Mollick mostly walks on the sunny side of the AI street. “Always invite AI to the table” is Rule #1. Many examples in the book are drawn from his work as a teacher, and what he teaches is how to create new products for the market and how to manage organizations more effectively. Like Sal Khan of Khan Academy, Mollick sees the future of AI-powered personalized learning as transformative and fast-approaching. “Assume this is the worst AI you will ever use” is Rule #4. If you are looking to overthrow the system or wrestling with the contradictions of drawing a paycheck from the neo-liberal university-industrial complex, Co-Intelligence is not likely to speak to you. However, if you want a deeply knowledgeable tour of the fast-shifting border between human and machine capabilities, this is the guidebook you need. Mollick calls this border the jagged frontier:

Imagine a fortress wall, with some towers and battlements jutting out into the countryside, while others fold back towards the center of the castle. That wall is the capability of AI, and the farther from the center, the harder the task. Everything inside the wall can be done by the AI, everything outside is hard for the AI to do. The problem is that the wall is invisible, so some tasks that might logically seem to be the same distance away from the center, and therefore equally difficult – say, writing a sonnet and an exactly 50 word poem – are actually on different sides of the wall.

The jagged frontier is a weird place, and Mollick makes it intelligible without minimizing its fundamental weirdness. In showcasing his use of ChatGPT and other tools, he demonstrates how "trying to convince ourselves that AI is normal software" will only interfere with discovering what these tools are good for. Mollick's preferred metaphors for this discovery come from magic and myth. In his best essays, Mollick constructs an AI future filled with centaurs and grimoires (spellbooks), ending not in catastrophe but in eucatastrophe, J.R.R. Tolkien's term for an optimistic outcome reversing what appears to be certain doom.

Despite a facility with magical images and terminology born of years playing Dungeons and Dragons (I'm just guessing here, but take a look at the games on the shelf behind him), Mollick remains clear-eyed about the machine nature of these tools. As he puts it in the essay Magic for English Majors, "AI isn't magic, of course, but what this weirdness practically means is that these new tools, which are trained on vast swathes of humanity's cultural heritage, can often best be wielded by people who have a knowledge of that heritage." As a comparative literature major and history teacher, I find this encouraging. It is exactly the sort of thing those of us who care about the humanities, or at least care to keep getting paid to teach them, want to hear. More important, it points to an underrated aspect of these tools.

As Alison Gopnik argues in this short talk, generative AI is a cultural technology, one that is vexing because it is so easy to ask it to retrieve relevant information. From punch cards to typing code on keyboards, using computers has always required specialized skills and knowledge. That is no longer true. This powerful technology, with access to the raw data of nearly all of human digital culture, can now be used simply by asking questions in everyday language. You don’t even have to use English, as foundation models have shown surprising abilities to translate from other languages into their preferred, or native, language: English.

Did you see what I did there? I anthropomorphized foundation models¹ by referring to their preferences and suggesting that a foundation model has a native or first language the way humans do. That’s not at all how these machines work. They are weird and complicated, but we know they are not processing information like humans do. They are not sentient or conscious. Mollick argues that even though this is obviously true, we should treat foundation models as if they were people. In fact, “Treat AI like a person” is Rule #3.

This is where Mollick differs from more skeptical writers like Emily Bender, Ted Chiang, and Timnit Gebru, who urge us to avoid thinking of an AI’s information processing in human terms. According to Bender, a foundation model does not write or speak; it “extrudes text.” These critics are not simply being fussy about terminology. They see the constant anthropomorphizing and speculative excitement about foundation models as an intentional smokescreen for exploitation and extraction. They are deeply worried about the monopoly and monopsony power of giant technology companies, about how the automation of knowledge work may displace workers, and about the ways sexism, racism, and colonialism are embedded deeply in the models’ data and reflected in the outputs of AI machines. Mollick, a former consultant at Mercer and a professor at one of the best-known business schools in the world, is on the other side of many of the battle lines in this discourse. And yet, Co-Intelligence often reads like a guide to staging interventions with techno-optimistic, bottom-line-obsessed managers who shake their heads sadly at the prospect of laying off half their workforce, or with technology executives who wonder why higher education institutions haven’t already been disrupted out of existence.

Co-Intelligence answers Ted Chiang’s question from last May’s Will A.I. Become the New McKinsey? with a hopeful “no,” at least in the short term. Mollick’s hopes rest on how generative AI will be adopted. Firms that simply reduce headcount in anticipation of productivity increases will severely damage their ability to take advantage of what generative AI offers. Augmentation, not automation, will drive adoption, so firms that “commit to maintaining their workforce will likely have partners who are happy to teach others about the uses of AI at work, rather than scared workers who hide their AI for fear of being replaced.” Learning how to use AI will not come from centralized processes imposing controls, but from workers experimenting and sharing their results. The longer-term future of work is less certain, but Mollick suggests “the potential for a more radical reconfiguration of work, where AI acts as a great leveler, turning everyone into an excellent worker.”

For those hopeful that some combination of governmental regulation, consumer activism, and critical AI literacy may be enough to mitigate the worst social dangers of generative AI, Mollick’s short-term optimism and emphasis on human agency can be compelling. In his view, lawmakers and tech billionaires won't make the most important decisions about using AI; the collective choices of middle managers and classroom teachers will matter more. He writes, “There is no outside authority. We have agency over what happens next, for good or bad.” Of course, we all want the good. Distinguishing it from the bad is the tricky part. And Rule #3 is bad.

The question of whether anthropomorphizing machines is okay is perhaps the most visible of the battles raging between those excitedly speaking of AI disruption and those urging caution. In his first post after Co-Intelligence was released, Mollick explains why he has chosen to “stop writing that an ‘AI “thinks” something’ and instead just write that ‘AI thinks something.’ The missing quotation marks may seem like a subtle distinction, but it is an important one.” He points to this essay by Gary Marcus and Sasha Luccioni as an example of experts arguing against the anthropomorphizing habits of writers and technologists. Their advice about foundation models is “Treat them as fun toys, if you like, but don’t treat them as friends.”

Some of the worry comes from what researchers call automation bias, the tendency for people to accept guidance from machines less critically than they should, even when presented with explanations that should call the outputs into question. Some studies of this phenomenon involve the flight simulators used to train airline pilots. They show why, despite the sophistication and reliability of autopilot technology, commercial airliners still carry human co-pilots. Humans learn to be careless when given machine assistants. They substitute the machine’s judgment for their own, even in situations where following the machine’s direction will crash the plane. Mollick’s research on consultants using AI shows that this sort of learned carelessness explains why, on some tasks, knowledge workers without AI help did better than those working with AI. He explains that in such situations “AI made it likelier that the consultants fell asleep at the wheel and made big errors when it counted. They misunderstood the shape of the Jagged Frontier.”

Mollick’s counter to this problem boils down to developing a framework to “divide tasks into categories that are more or less suitable for AI disruption.” Sure, but shouldn’t we find ways to ensure that users interrupt their habit of uncritically accepting AI outputs regardless of the category? That would be the point of Rule #2, “Be the human in the loop.” Learned carelessness seems likely to be common when working with AI. Yet Mollick defends treating AIs as humans because he sees practical value in Rule #3: it helps people adjust their expectations and habits of mind.

I’m proposing a pragmatic approach: treat AI as if it were human because, in many ways, it behaves like one. This mindset can significantly improve your understanding of how and when to use AI in a practical, if not technical, sense.

In other words, learning to use AI is initially inefficient, so, pragmatically, we are better off telling workers and students to treat their new talking tools as if they were people. After reviewing the problems and risks of anthropomorphizing foundation models, he writes, “working with AI is easiest if you think of it like an alien person rather than a human-built machine.” This is what I call ordinary pragmatism. It focuses on the immediate value of the framework without considering the larger social context. Is the inefficiency of learning a new approach a bigger problem than automation bias in the long run? That seems unlikely. Mollick’s short-term thinking makes Rule #3 frustratingly wrong: frustrating because his metaphors of magic and his examples of games and simulations offer a much better way to approach generative AI, one that could help make users more careful even as it encourages creativity.

I’ve played my share of Dungeons and Dragons. A frequent element of such games is the non-player character, or NPC. These are game elements designed to add complexity and fun. They act through the agency of a gamemaster (in tabletop games) or a computer program (in video games). They follow rules or guidelines laid down beforehand, but their motivations, indeed their very nature, are not fully understood by the players. They may help players on their quests, hinder them, or both. Frequently, they appear to want to do one thing, and then it is revealed that they are doing the opposite. NPCs are a fun way to introduce the unexpected into a game or activity that might otherwise be a bit boringly predictable. They are also a much better way to think about foundation models, as Mollick shows. His actual experiments sound more like a dungeon master constructing NPCs than a manager introducing new employees.

My favorite example is the hilarious set of characters he created to help him with the often lonely and frustrating work of writing and editing a book. Ozymandias, Mnemosyne, and Steve provided feedback on a draft of his chapter on “AI as a Coworker.” Mollick writes that these helpers are examples of how “AI can simulate being a human being.” But these characters are human-like only to the extent that they process inputs and produce outputs using natural language. Their actual abilities are narrower, and in some ways deeper, than those of a human editor or first reader. More important, Mollick does not treat them as co-workers or fact-checkers. Rather, as their allusive names suggest, Ozymandias and Mnemosyne use their distinct perspectives and voices as demigods to suggest edits and interesting connections or questions. Steve’s job is to simulate “a normal human reader of popular science and business books,” so his suggestions, like his name, run to the mundane. Mollick’s description of their work together sounds like the premise for a television comedy about AI set in a writers’ room. I’m not sure how useful it would be as a writing process, but I’d watch that pilot.

I recently wrote about imagining educational bureaucracies as dungeons that need to be explored, mapped, and rebuilt. Retrofitting bureaucratic dungeons to be more democratic and recentering them to focus on human needs is slow and frustrating work. Enlisting these sorts of agentic AI tools built on foundation models in a quest to reform our schools and workplaces sounds like a lot more fun than a staff retreat to consider incremental improvements to business processes. Mollickian metaphors and character-based NPC assistants offer a way to enchant our efforts at reform with play while building social awareness of AI’s limitations and biases. Instead of introducing these tools as human-like interns who will do the boring work until they get good enough to replace us, maybe we should consider how they might enlarge our collective human capabilities while fully acknowledging how unreliable and weird they are.

Of course, human co-workers are often unreliable and weird too, but in ways that are familiar. Generative AI is confusing because it is so different from earlier forms of technology. The question of how to help users as they navigate this confusing transition to machines that simulate conversation is important, but the surprising cultural capabilities of foundation models are no reason to treat them like they are people. In fact, it is important that we not let the ease of communicating with them blind us to how alien they are. Embracing the weirdness and fun of AI through the framework of magic may seem silly, but my point is that incorporating these tools into our workplaces and schools need not be boring. In fact, enlivening our imaginations as we carefully explore how we should use this technology is the necessity here, not anthropomorphizing it.

Mollick concludes his final chapter “AI as our Future” by arguing “Correctly used, AI can create local eucatastrophes, where previously tedious or useless work becomes productive and empowering.” That is a plausible and compelling future, one that could provide knowledge workers and teachers the means to change their workplaces for the better. Getting to that future will require acts of imagination and creativity that treat generative AI as a cultural technology. Mollick gives us language to perform such acts but also gives some bad advice. We are better off treating this new technology as a new kind of technology, and not as a new kind of person.

I said that Mollick’s view on the short-term value of treating AIs like people is an example of “ordinary pragmatism.” In On Techno-Pragmatism, The Radicalism of William James, and in essays soon to be posted here, I explore what pragmatism in the philosophical sense means for understanding generative AI.

𝑨𝑰 𝑳𝒐𝒈 © 2024 by Rob Nelson is licensed under CC BY-SA 4.0.

¹ I use the term foundation model instead of large language model (LLM), which is the term Mollick uses. In most cases, these are synonyms. I prefer foundation model because these pre-trained models incorporate other types of cultural artifacts, such as images and music, not just language. They also serve as the “foundation” for other tools or for functions within other tools.

Well done, Rob. Your reading is consistent with mine. After Mollick opens the door to anthropomorphization, the middle section of the text contains a long series of dangers about overreliance--a process arguably facilitated by anthropomorphization. That constellation of ideas seems pretty clear to me. I find your argumentation very effective where you analyze what Mollick is actually doing when he is creating his own copywriting entities. Well done!!! I'd love to see some work being done on how we resist anthropomorphization in our writing and our classrooms. If you are looking for a place to explore such ideas, consider my Substack as a possible platform with a somewhat larger built-in audience.

Excellent. That sounds like a good approach. Focusing on specific words with a theorist in tow. And who else? I am imagining a James Does AI book down the line. 😋