This is Part One of two. The two parts can be read in any order, but if you’re here for William James, you’ll find him mostly in Part Two.

Language works against our perception of the truth. —William James

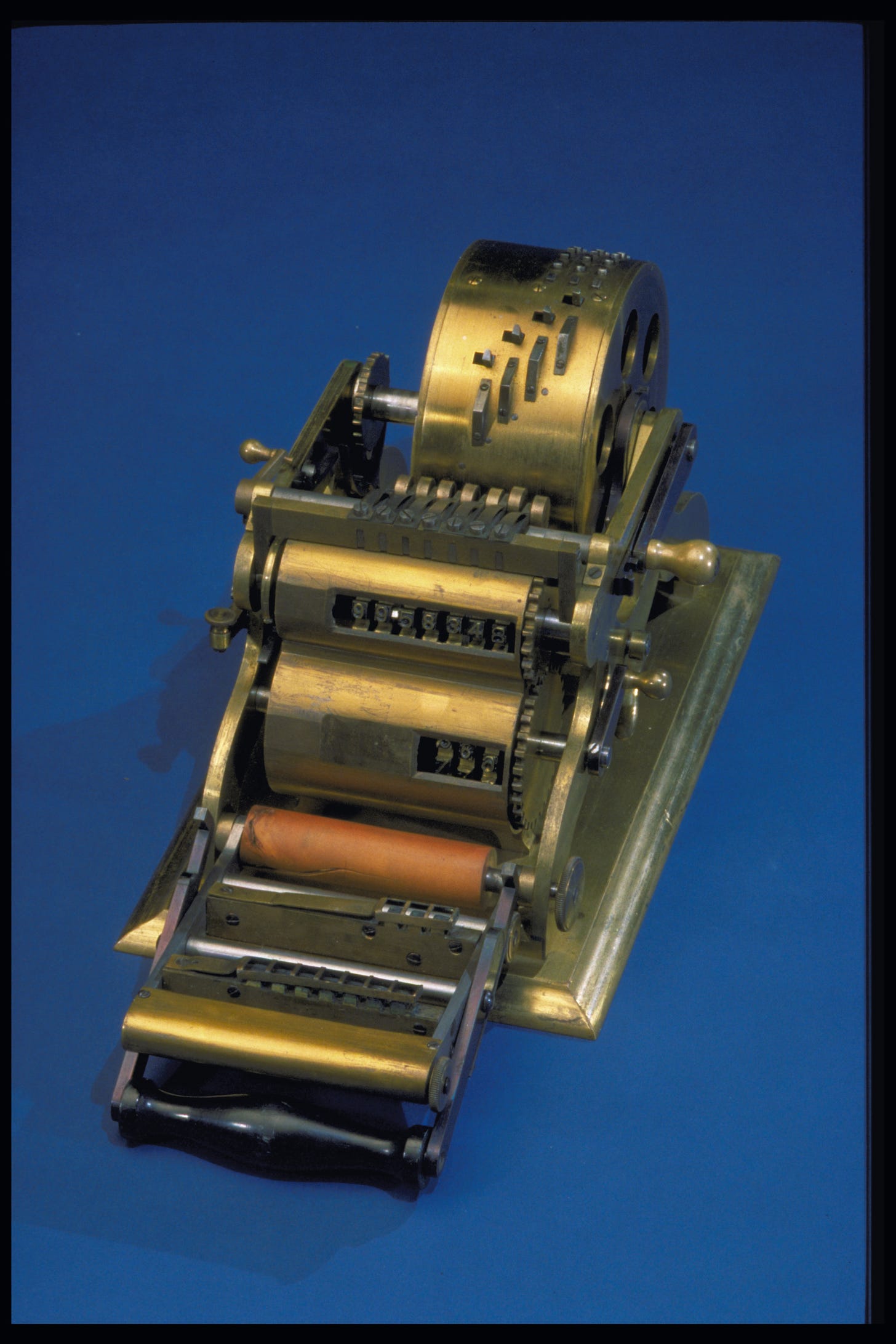

No one believes this calculating machine is intelligent. It solves problems that a human must formulate and input. It doesn’t think. That’s true of most machines we call computers and the programs that run on them. AlphaGo is a purpose-built program that plays Go. Its operations involve more than arithmetic, so you could call what AlphaGo does thinking, in the sense that it follows well-defined procedures to reason and make decisions. But it is probably more accurate to call this computation. AlphaGo thinks only in a very narrow range. Outside the context of Go, it is not intelligent.

The question of whether a Large Language Model (LLM) is intelligent is trickier to answer. Its computational processes have much greater range and power than AlphaGo’s. It uses language, which researchers call “natural language” to distinguish it from machine or programming languages. It also has a neural network with layers not unlike those of the human brain. An LLM may not have the range and power of a human, but because its computational processes use natural language, it mimics humans in ways that can seem intelligent.

Skeptics like Alicia Bankhofer, Emily Bender, Ted Chiang, Sasha Luccioni, and Gary Marcus urge us to keep the differences between computational processes in machines and cognition in humans firmly in mind. They argue against anthropomorphizing transformer-based models. LLMs do not speak or write. Instead, as Bender likes to say, they “extrude text.” She and other critics believe treating LLMs as if they are thinking machines encourages a general misunderstanding of what they are.

Ethan Mollick sees benefits in acting as though machines think. He says that the skeptics “ask important questions” such as “How does treating AI like a person cloud our views of how they work, who controls them, and how we should relate to them?” Yet Mollick and other enthusiasts urge us to treat LLMs like people even though, as he puts it, they “don’t have a consciousness, emotions, a sense of self, or physical sensations.” He titled his book Co-Intelligence to describe how transformer-based models can augment human thinking, making workers more productive and students better able to learn. Anthropomorphizing LLMs makes it easier to incorporate them into our work and education. I think this may be the case in the short term, but pretending LLMs are people works against our understanding of transformer-based language models.

At its core, the debate over how to treat LLMs is about the relationship between computation and cognition. Enthusiasts don’t see a problem with using the concepts of human cognition to describe the behavior of LLMs. For skeptics, human thought is vastly more complex than the computational processes happening in even the largest models, and because LLMs do not understand context, they do not know anything. Using words like think, write, or speak to describe what LLMs do is a category error. As Edsger Dijkstra analogized, asking if a machine can think is as relevant as asking if submarines can swim.

Our attempts to understand LLMs founder on the semantic confusion created when we apply the vocabulary of human cognition to computational processes. When scientists use words like grokking to describe behaviors in transformer-based models, they imply machine processes such as matching patterns or extruding text are the product of a mind. Those implications are embedded in the name of the field, artificial intelligence, and in the words researchers choose when they write up their findings.

Attention!

Take “Attention Is All You Need.” According to Wired, the paper has “reached legendary status” because it was the first to describe the deep learning architecture in LLMs called a transformer. The paper also popularized attention as a word to describe the information processing that occurs in a transformer-based model. Repurposing words this way is a necessary part of modern scientific inquiry. Creating new knowledge requires developing the language to explain what has been discovered.

This process is often haphazard, borrowing terms from other fields of study or from literature. For example, Grok is a term first used by science-fiction writer Robert Heinlein in Stranger in a Strange Land. Jakob Uszkoreit, one of the co-authors of the “attention paper,” was the first to use self-attention to describe a particular computational process. That process lets language models account for relationships among words in a sequence that are not near each other by assigning each word a value, or weight. He also came up with the term transformer, which sounds like it might have been inspired by electrical engineering. But according to Wired, Uszkoreit was simply thinking about how the mechanism completely changes, or transforms, the information it takes in. The name also carried fond associations with the Transformers toys of his childhood.

William James described how scientific facts get articulated: “Truth grafts itself on previous truth, modifying it in the process, just as idiom grafts itself on previous idiom, and law on previous law.” He reminds us that this is a normal scientific process and that the words we use to formulate scientific theories or laws are “only approximations.”

Investigators have become accustomed to the notion that no theory is absolutely a transcript of reality, but that any one of them may from some point of view be useful. Their great use is to summarise old facts and to lead to new ones. They are only a man-made language, a conceptual shorthand, as some one calls them, in which we write our reports of nature; and languages, as is well known, tolerate much choice of expression and many dialects.

The choices Uszkoreit made when he and his co-authors wrote their report had consequences, intended or not. The choice of transformer yielded a technical term and an amusing anecdote. The choice of self-attention continues the problematic tradition of using words with meanings associated with human cognition to describe computational processes. It assumes a “self” that directs attention to information, inviting misunderstandings of what attention actually means in this context.

Using words like thinking, attention, and intelligence to describe computational processes creates semantic confusion even when the word is explicitly defined to fit in its new context. The authors of “Attention Is All You Need” were careful to define attention “as mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors. The output is computed as a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function of the query with the corresponding key.”

To translate this a bit, attention is a statistical process that creates a weighted average of neighboring observed data. Transformer-based machines use attention, especially what Uszkoreit calls self-attention, to model the relationships among words computationally, and this machine process generates better outputs. Attention in transformer-based machines does not mean the machine directs the flow of its processing to specific elements of its dataset, the way humans direct their attention to a word or phrase in a crossword puzzle. Nor does self-attention mean anything like attention to internal processes, the way a person might notice the pain in their foot after stepping on a Lego. And it means nothing like self-reflection, a human attending to their own cognitive processes. An LLM has no sense of self, no way to direct its attention.1
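The sketch below renders the definition quoted above in a few lines of Python, for readers who want to see how ordinary the arithmetic is. It assumes the scaled dot-product compatibility function described in “Attention Is All You Need”: a dot product divided by the square root of the vector length, followed by a softmax. It also makes a deliberate simplification. Real transformers first multiply each word vector by learned query, key, and value matrices, and here the raw vectors play all three roles. The function names and toy vectors are hypothetical, chosen only for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into non-negative weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def attention(query, keys, values):
    """Map a query and a set of key-value pairs to an output vector.

    Compatibility function: scaled dot product of the query with each key.
    Output: the weighted sum (a weighted average) of the value vectors.
    """
    d_k = len(query)
    scores = [dot(query, k) / math.sqrt(d_k) for k in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


def self_attention(sequence):
    """Score every vector in a sequence against every vector in that same sequence.

    Simplification (an assumption of this sketch): no learned query, key,
    or value matrices; each raw vector serves as query, key, and value.
    """
    return [attention(x, sequence, sequence)[0] for x in sequence]


if __name__ == "__main__":
    # Three toy two-dimensional "word" vectors (hypothetical values).
    words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    out, weights = attention(words[0], words, words)
    print("weights:", [round(w, 3) for w in weights])
    print("output:", [round(x, 3) for x in out])
    print("self-attention:", [[round(x, 3) for x in row] for row in self_attention(words)])
```

Each output is a weighted average of the value vectors, because the weights are non-negative and sum to one. The “self” in self-attention refers only to where the queries, keys, and values come from, which is the same sequence. It does not refer to any agent doing the attending.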

What’s happening in an LLM is nothing more mysterious than applying well-understood statistical processes within a larger collection of computational processes. The mystery is why it works so well. Why do the outputs from transformer machines using this statistical process and this processing structure seem so much better than those from earlier machines? The answer is that no one knows, at least not yet. After all, this type of machine is complex and was invented only recently.


We do know that humans don’t use a weighted average calculation to arrive at a coherent sentence when thinking about the answer to a question. As Cosma Shalizi points out, “What the neural network people branded ‘attention’ sometime around 2015” is nothing like what the word means in the context of human psychology. “The sheer opacity of this literature is I think a real problem.” Yes, even for the people who write it. As Wired says: “Though AI scientists are careful not to confuse the metaphor of neural networks with the way the biological brain actually works, Uszkoreit does seem to believe that self-attention is somewhat similar to the way humans process language.”

Thanks to the semantic confusion created by using words like intelligence and self-attention, many researchers share Uszkoreit’s belief that biological brains have some poorly understood but important similarities with LLMs. They have an intuitive sense that LLMs process information in ways similar to humans. Or, they think human thought is fundamentally computational. Or, maybe aspects of both explain the amazing leap forward in language capabilities of transformer-based models. These vague intuitions ignore a more prosaic explanation: LLMs are simply more complex versions of AlphaGo, computational processes built to manipulate natural language rather than the playing pieces in Go.

As any four-year-old can tell you, games like “pretend to be a robot” or “tell me a story” or “draw me a picture” are fun. More advanced games like “schedule a meeting” or “write an essay for my composition class” or “create an image for my substack newsletter” require contextual knowledge no four-year-old has, but they involve following rules and conventions. When LLMs master these and similar activities, they are playing open-ended, cooperative games. These games are different from Go and, in most ways, more complex, but they are still recognizably games. Ludwig Wittgenstein called them “language games.” The term is rich with complex and sometimes contradictory meanings, but at its core it refers to any use of words in a social context. LLMs play language games very well.

As ELIZA demonstrated in the 1960s, computers that use natural language, that turn the activity of typing information into a computer into something that feels two-sided, have weird effects on humans. We really like playing language games with computers! And sometimes, we get carried away. The Eliza Effect is the name for acting as though a machine is a conscious entity capable of thoughts and feelings. I’ve wondered how many who experience it actually believe their chatbot conversational partner is conscious. Is the Eliza Effect mostly make-believe? A willing suspension of disbelief to enjoy the game?

Computers

I have also wondered how many researchers working in what we used to call machine learning are thinking carefully about the distinction between computation and human cognition. How many are like Uszkoreit, assuming an equivalence based on semantic confusion? After all, the semantic confusion goes back to the beginning of digital computers. The meaning of the word computer itself is an interesting example of semantic shift.

From the time the word was coined in the seventeenth century until the 1950s, computer meant a human who performs complex mathematical calculations. That changed when digital computers came along. It made sense to apply the word to the machine because computation is precisely what these early digital computers did. The new definition of computer captured the essential nature of this new tool, applying a term that described a human cognitive function to a machine.

This is a straightforward example of how science uses language to “summarise old facts and to lead to new ones.” Digital calculating machines required a new vocabulary for their functions, so the meaning of an old word was updated. The process did not stop there. A constellation of new, overlapping interdisciplinary fields emerged to explore the new knowledge being created as scientists built these digital computers. These fields were cognitive science, computer science, data science, electrical engineering, information science, and neuroscience. The conceptual vocabulary they developed borrowed heavily from human psychology and followed the shift in the meaning of computer. By applying terms like intelligence, attention, and thinking to computational processes, that vocabulary assumed a relationship between cognition and computation. Incorporating natural language into computational inputs and outputs reinforced intuitions that something like a human mind was at work behind or underneath all that computation.

Joseph Weizenbaum was an early AI researcher who became skeptical of the anthropomorphizing tendencies of his colleagues. He created ELIZA and coined the term Eliza Effect. Weizenbaum was dismayed by how easily users of his experimental natural language machine deluded themselves into believing that a simple computer program was providing insight and understanding. In Computer Power and Human Reason: From Judgment to Calculation (1976), he suggested there are limits to a research program that assumes machine processes are equivalent to human thought.

But at bottom, no matter how it may be disguised by technological jargon, the question is whether or not every aspect of human thought is reducible to a logical formalism, or to put it into the modern idiom, whether or not human thought is entirely computable.

If Weizenbaum is right, if the computational paradigm misunderstands the relationship between computation and cognition, then how has artificial intelligence successfully built such new and powerful machines? The answer is that its success flows in only one direction. Using the vocabulary of human cognition to design computers has led to extraordinary technologies, including a global information network and powerful pocket computers. And now, transformer-based models play language games and generate cultural artifacts that are sometimes indistinguishable from those created by humans. The computational paradigm Weizenbaum criticized has been extraordinarily successful…for the purpose of building computational machines and models.

Computational theory has had less success in explaining how the computational processes of LLMs are at all similar to what happens in the human brain. Terms like intelligence and consciousness are no closer to being measured or observed than they were a hundred years ago. Computers that play human games well have not meaningfully updated how we understand cognition. There have been breakthroughs in treating diseases of the brain, but what psychology or philosophy can tell us about consciousness has changed very little from the days of William James. This is true even though we have, thanks to digital computers, new ways to measure and observe brain activity. We have lots of data but no conceptual framework to help us understand what we observe.

In The World Behind the World and in his essays, Erik Hoel argues that little significant progress has been made in neuroscience over the last few decades. The reason, he argues, is the lack of scientific agreement about what consciousness is. In his view, neuroscience is still pre-paradigmatic. I am convinced that consciousness, or rather the impossible vagueness of the concept, explains something important about the scientific disciplines clustered around the project of building intelligent machines.

Generative AI shows the power and the limits of the paradigm that created it. Having achieved transformer-based models that play language games and produce cultural artifacts, researchers use terms like self-attention without explaining or defending the assumptions behind the word self. There is an incredibly well-funded research and engineering project trying to build intelligence, but it has no agreed-upon way to define or measure the term. Artificial intelligence has taught us a great deal about machines and nothing about thinking. Consciousness is why.

Part Two of this essay covers Alan Turing’s influential solution to the problem that consciousness poses for artificial intelligence, how science fiction explains why that solution no longer works, and the advantages of thinking about LLMs as a cultural technology. As usual, William James helps me pull all this together.


𝑨𝑰 𝑳𝒐𝒈 © 2024 by Rob Nelson is licensed under CC BY-SA 4.0.

Here is William James’s definition of attention:

Every one knows what attention is. It is the taking possession by the mind, in clear and vivid form, of one out of what seem several simultaneously possible objects or trains of thought. Focalization, concentration, of consciousness are of its essence. It implies withdrawal from some things in order to deal effectively with others, and is a condition which has a real opposite in the confused, dazed, scatter-brained state which in French is called distraction, and Zerstreutheit in German.