Some readers may recall that I abandoned my weekly cadence of posts earlier this year to focus on long-form essays. While my experiment writing on the internet, now barely a year old, was much improved by this change, I now start more essays than I’m able to finish. The ideas, half-worked out, stay in my head, distracting me from the longer pieces I’m trying to complete.

Logpodge is the perhaps too-cutely named answer to this problem. These will be collections of short takes on topics occupying what we used to call the blogosphere. By clearing out the detritus, I can focus on writing…

essays about happenings in educational technology where my knowledge of how schools and universities work may shed light on topics in the news;

review essays of books that helped me understand generative AI and other forms of computational intelligence as a cultural technology rather than as the creation of alien consciousness;

long-form essays exploring how the ideas of philosophical pragmatists, especially William James, make sense of our current cultural and technological moment.

On Beyond Chiang

A new Ted Chiang essay on AI is always an occasion. His “Will A.I. Become the New McKinsey?” remains the essential critical essay on AI from last year. This one from 2017 explains so much of what has happened with Silicon Valley and AI over the past ten years that it should take the top spot when NYT does its Best 100 AI Essays of the 21st Century.

Like other writers on the internet, I found the responses to his latest piece more interesting than Chiang’s actual defense of human creativity. I consider myself loosely affiliated with the Bender/Chiang/Gebru school that holds LLMs don’t have minds and are not intelligent, but there is only so much poking at the bubble that is worth doing. Besides, these days, confidence artists and AI enthusiasts seem increasingly on the defensive, although it seems Strawberry has created a brief reversal.[1]

R Meager, who writes an excellent newsletter, appeared in my feed to tell me to read this essay by Celine Nguyen. I took Nguyen’s piece as a response to Chiang even though it appeared earlier, not only because I read it after, but because it pushes past the premise of his skeptical, human-centric critique of the political economy of what Silicon Valley thinks they are selling. Nguyen is more interested in what generative AI can do for artists, especially those who are interested in randomness in the creative process. Her essay opened doors and windows into the stuffy room where I’ve been contemplating the value of LLMs.

Henry Farrell, who also writes an excellent newsletter, takes Chiang's essay as another opportunity to explore what he calls Gopnikism. This is the view that we should stop treating LLMs as proto-agents with proto-minds and instead think of them as a cultural technology, like the printing press or the graphite pencil.[2] Farrell’s piece brings in Brian Eno to illuminate the cultural potential of generative models. Like Nguyen, Farrell considers how LLMs’ fundamental structure (the mathematics of probability applied to massive amounts of cultural data) creates a random but recognizable something. This something is not necessarily art, but it can be interesting and useful.

The unpredictability of LLMs’ outputs is the source of what Ethan Mollick calls their weirdness, which he wants us to embrace, arguing that if we think LLMs are like previous software, we’re missing this technology’s real potential. Using Eno as a point of departure, Farrell echoes Mollick’s commitment to experimentation and Nguyen’s interest in seeing generative models as a method for both containing and extending the operations of chance in a creative process. As Farrell writes of Eno:

I don’t think that it is going too far to say that his approach suggests a set of ideals not just for experimental music, but for art and culture more generally, as a form of social organization centered around fruitful directed experimentation. Cultural forms, from the little to the large, should not be chaotic, but they shouldn’t be too rigid either. They ought be adaptive to their environment.

Nguyen also references Eno, relating his observation about how the passage of time alters our perception of a medium’s flaws to our current moment:

“The unrealistic faces, the jerky motions, the occasional plunge into uncanny-valley territory. But for those flaws, I can’t help but think of a much-quoted passage from the musician Brian Eno’s A Year with Swollen Appendices, a diary and essays from 1995:

Whatever you now find weird, ugly, uncomfortable and nasty about a new medium will surely become its signature. CD distortion, the jitteriness of digital video, the crap sound of 8-bit — all of these will be cherished and emulated as soon as they can be avoided. It’s the sound of failure: so much modern art is the sound of things going out of control, of a medium pushing to its limits and breaking apart. The distorted guitar sound is the sound of something too loud for the medium supposed to carry it. The blues singer with the cracked voice is the sound of an emotional cry too powerful for the throat that releases it. The excitement of grainy film, of bleached-out black and white, is the excitement of witnessing events too momentous for the medium assigned to record them.

Weird, ugly, uncomfortable, and nasty all describe my response to the cultural outputs generated by transformer-based large models. The mediocrity of their outputs, the bland sameness of the prose or images, is discomforting, not least because it is so difficult to tell for sure whether a human or a transformer-based model was the source. Will those aspects be as discomforting in ten years? Will the cultural garbage being mass-produced by this technology be both a marker of this historical moment and the foundation of new creative processes? If Nguyen is right, the answer to both is yes.

I’m not sure what this insight has to do with the value of generative AI to writing or education, but the reminder that the passage of time alters the meaning of cultural artifacts feels important. What an output means right now is only a dim outline of what it might mean in a year or ten.

On Beyond Anthropomorphization

My brother uses a robot voice when he talks to his phone. This was amusing and distracting the first few times he did it with me in the room, but eventually, I barely noticed. He’d trained me to tune out his weird, mechanical voice as he dictated memos and emails directly to his device. This training has made hanging out while we’re both working remotely much easier!

I think this behavior is a useful way to think about natural language interfaces. My frustrations over the past decade when talking with Siri or Alexa might have been lessened had I used my robot voice. Even better if the bot had adopted it. The perfectly helpful and competent female voices who answer my queries promise far more than they deliver. How much easier it would be if I adapted my mindset to the interface by thinking like a robot, having us both speak as machines instead of expecting the machine to think like a human.

Creating digital twins, reanimating dead philosophers, and programming artificial friends are such limited uses of transformer-based cultural tools. Why imagine them as minds when we can treat them as weird and interesting interfaces to oceans of data about human culture and history? Why invest so much in making them amusing conversationalists when they can do so much more than chat with us?

My experiments in the classroom this year have not yet involved talking to JeepyTA using a robot voice. Instead, my class is using the tool to try to enliven our asynchronous discussion board. This has always been a dead zone of class activity. I want students to try out ideas and share insights, but the reality of online discussions has always disappointed. If I use incentives or requirements to get students to post, the students hit their mark and only that. If I leave it optional and invite my students to post as they will, no one gets around to it more than once or twice.

The wonderful team at the Penn Center for Learning Analytics has set up JeepyTA to help jump-start the online discussions in my class, or at least give students immediate feedback just after they post. I’ll be posting about this and other experiments at AI Log Teaches.

What problem is it that you are trying to solve?

I suggested back in July that this academic year would see growing skepticism about LLMs as personalized teaching assistants. The entrepreneurs rushing into this market will discover just how much institutions are willing to pay for a limited tutorbot. There are other potential educational uses of LLMs, and one that is coming into focus relates directly to Farrell’s point that new “cultural forms ought be adaptive to their environment.”

Ed, the LA school district’s now-disgraced chatbot, has become a favorite example of the hubris of AI enthusiasts and clueless bureaucrats. But Ed had potential. Among the points I made as I predicted the disaster that unfolded was that using an LLM to help navigate a complex educational bureaucracy, “providing a tool that translates school bureaucratese into Tagalog or Persian,” is an answer to a real problem.

The mistake LAUSD made was to buy the Silicon Valley edtech dream of personalizing the experience. This turned what could have been an interesting experiment into a disaster due to inadequate data security controls and an overly ambitious scope based on the misbegotten fantasy of personalized learning.

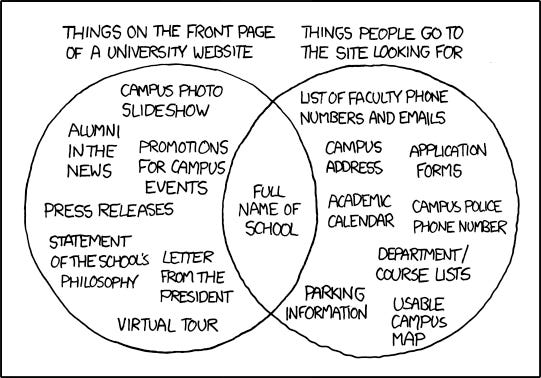

There are educational uses of LLMs that do not require personalization, like solving this problem, as captured by Randall Munroe.

A college website’s main purpose is marketing to prospective students and donors, leaving current students ill-served. A portal or main landing page for currently enrolled students is the usual solution, but the distribution of student services across various academic, student life, and administrative departments makes this challenging. Bureaucratic strife and fragmentation mean that it is far easier for each division or unit to create its own digital strategy for student success. Or, more accurately: develop a mildly crappy website that gets updated only when a new IT director gets hired.

You see the problem. A student looking to answer a simple question about a graduation requirement or a policy has to understand the bureaucratic structure of the institution to know what website or policy handbook to consult. Students must learn to see the institution as the bureaucrats see it: an interlocking puzzle of fiefdoms, each concerned primarily with justifying its budget while supporting students through its specific mission.

Ivy.ai seems to have a potential answer to this problem. Their approach tackles the problem one fiefdom at a time. They will hook up their chatbot to a specialized database of materials and websites tailored to whoever is picking up the tab. You only need one office to sign on to get started. If another office decides they want to join, it is easy enough to add content to the primary materials the bot accesses. This incremental approach to adoption is well-suited to large universities. I have only seen a demo and done a little nosing around, so this is not an endorsement, just an observation based on limited information.

I learned a lot about this problem by talking with the founders of a start-up called Lucy over the past year. I recorded an interview with Grégory Hissiger, Thomas Perez, and Mathieu Perez, hoping to write something similar to this piece with Will Krasnow about how young AI entrepreneurs think about their work, but the dynamic of a four-way conversation made that too complicated.

Like other young developers I’ve talked with over the past two years, the Lucy team was working on a Socratic, course-based chatbot with one of their professors and looking to expand the range of courses it would support. This summer, they pivoted to developing an academic advising assistant, taking on the general problem of navigating a bureaucracy but focused on academic support. They are now in talks to create pilot projects with Harvard and Penn. I would be happy to put readers in touch with the team. Just contact me through LinkedIn.

What I like about the Lucy team’s approach is that they are focused on answering the easiest and most common questions a student might ask in an important but narrowly defined set. They aim to answer the sort of question that a student might schedule an appointment to ask an advisor, only to discover the answer is readily available if the question is formulated in the correct institutional language. The key to getting this right is presenting the correct answer to straightforward questions while directing students to the correct human-staffed office for the messy, complicated questions. Confabulating a bad answer to the latter sort of inquiry, even once, would be disastrous.
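The routing idea in that paragraph can be made concrete with a small sketch. This is not the Lucy team's method, which I have not seen; it is a toy illustration in Python, and every name in it (`Entry`, `FAQ`, `FALLBACK`, `respond`, the `min_overlap` threshold) and both canned answers are hypothetical placeholders. The point it shows is the design choice: answer only when a question clearly matches a curated entry, and otherwise hand off to a human office rather than improvise.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    keywords: frozenset  # words that signal this curated question
    answer: str          # vetted answer written by the office, not generated


# Hypothetical curated entries; a real deployment would draw on office-approved material.
FAQ = [
    Entry(frozenset({"add", "drop", "deadline"}),
          "See the registrar's academic calendar for the add/drop deadline."),
    Entry(frozenset({"graduation", "credits"}),
          "The student handbook lists the credits required to graduate."),
]

# Hypothetical hand-off message for anything that is not a clear match.
FALLBACK = "This question needs a person. Please contact the academic advising office."


def respond(question: str, min_overlap: int = 2) -> str:
    """Return a curated answer only on a clear keyword match; otherwise refer to a human."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    best = max(FAQ, key=lambda entry: len(entry.keywords & words))
    if len(best.keywords & words) >= min_overlap:
        return best.answer
    return FALLBACK


print(respond("When is the add/drop deadline?"))
print(respond("Can I appeal my financial aid decision after a medical leave?"))
```

The first question matches a curated entry and gets its vetted answer; the second matches nothing and is referred to a person. A real system would use far better matching than keyword overlap, but the conservative default, refer rather than guess, is the part that matters.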

Will it work? I hope to say more about the pilots in a future essay.

𝑨𝑰 𝑳𝒐𝒈 © 2024 by Rob Nelson is licensed under CC BY-SA 4.0.

[1] I am fascinated by how many smart people spend a few hours playing with the latest models and then write essays suggesting a great breakthrough has occurred. Marveling at how LLMs “reason” or “think” misses the fundamentals of how they work. There is so much semantic confusion created when we analogize these tools to the human mind.

Just because the models can now generate text that describes step-by-step reasoning is not evidence they have minds or that they think. It simply means their application of the mathematics of probability to human language has incrementally improved their ability to mimic human language. I guess this is a game-changer in language games like crossword puzzles, but I do not think anyone has a clear idea of what value is generated by an LLM providing a verbal description of step-by-step processes.

[2] Farrell’s essays in The Economist, written with co-authors, are an excellent introduction to this framework. Here is the latest with Marion Fourcade, who is the co-author of the best AI book this year so far, The Ordinal Society.
