This essay is the third in a series about how I used generative AI in a class I taught in the fall term of 2024. Part 1 and Part 2 are available here.

Questions

I learned early on that trying to teach the same course twice is impossible. Heraclitus’s famous line about stepping in the same river twice applies. It is not the same river, and you are not the same person. I was teaching History of American Higher Education for the fourth time. It would not be the same course, and I would not be the same teacher.

My strategy to manage this reality is experimentation, but maybe not the sort of controlled experiment that comes to mind when you hear that word. I am interested most of all in the experiences of my students. Not so much generating data about those experiences but joining students in a stream of shared experiences that we reflect on and make sense of together.

This approach is consistent with how I think about the teaching of writing, especially the teaching of writing about history. Teaching. Reading. Writing. Learning. These discrete activities are not so discrete when you put them in motion. Then, they become actively entangled in the conversational play of a classroom. My experiments have always been about those four types of experiences conceived as a whole. What happens when we add the experience of working with an LLM?

There were two questions about using an LLM that I wanted to explore with my students, questions that had guided the design of the class.[1]

Would JeepyTA enliven the class’s asynchronous discussion board by providing an immediate and useful response to student posts?

Would JeepyTA provide feedback prior to in-class peer review activities in ways that students found helpful and that I believe strengthened the learning process?

Would JeepyTA enliven the asynchronous discussion board of the class by providing an immediate response to student posts?

Since the days of WebCT, I have been trying to tap into the energy of early Internet bulletin boards and chatrooms through the use of class discussion boards. Successful SAIL class activities depend on students doing intellectual work before class. Discussion boards seem like a way to share such work, making it visible to the other members of the class and starting conversations that can be continued in class. This has never happened to my satisfaction in any of my previous classes.

There is seldom any sustained discussion on the boards. Occasionally, a student will say something that informs a discussion at the next class meeting, but these are one-offs. When I try to generate discussions with my own eager posts or replies, I get what sounds to me like awkward silence in reply. When I try creating incentives for students to reply to posts, I get grudging but polite compliance, nothing like the energy of a live class discussion.

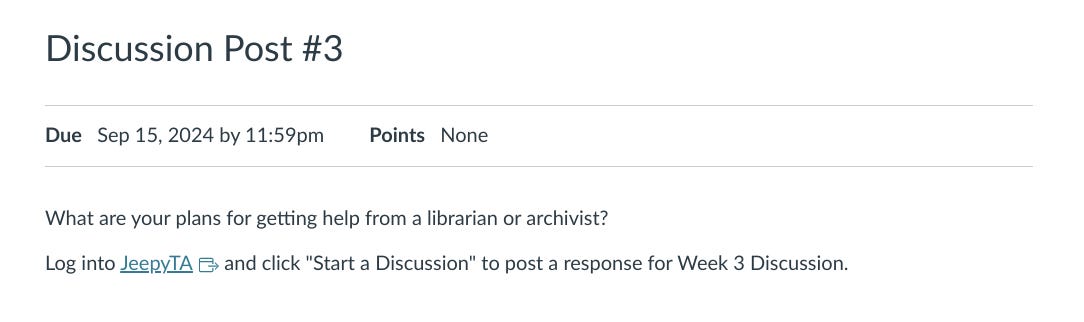

I thought JeepyTA might help change that, so I crafted six discussion post assignments asking students to post answers to questions like “What are one or two primary sources you might use for the long-form research paper?” and “What are your plans for getting help from a librarian or archivist?” We configured JeepyTA to respond immediately and helpfully. I hoped those outputs would kick off a more lively exchange, prompting responses from students.

That didn’t happen. JeepyTA responded at length and enthusiastically, emulating the sort of thing I might say (and sometimes did) in response to the posts. But those replies did not lead to substantive online discussion. However, the students’ reactions surprised me in one respect.

JeepyTA repeated and extended some of the guidance I gave in class in ways students found useful. For example, several students found JeepyTA’s response to their posts about contacting a librarian or archivist helpful. This task is something I emphasize early on because finding good primary sources is essential to writing a successful research essay. Sometimes, a student has identified a college and has a great idea for a thesis but isn’t able to access the sources to make it work.

We meet at the University Archives on the third day of class so students can talk with the helpful and friendly librarians and archivists at Penn. They emphasize the importance of reaching out to professionals at the institutions students are researching. Despite its importance, and my offers of support, some students struggle to do this.

I think JeepyTA added value by mitigating this problem in an educationally useful way. Since every student had to post an answer about their plans, every student received JeepyTA’s help. Here is the response one student received.

- Identify the Right Contact: Start by visiting the Columbia University Libraries website. Look for the section about their archives or special collections. Usually, they list specific contacts for different collections or topics.
- Set a Deadline: To keep your project on track, I recommend setting a deadline for when you will send this email. How about aiming to send your email by the end of this week?
- Draft the Email: When drafting your email to the librarian or archivist, consider including:
  - A brief introduction of yourself as a student from the University of Pennsylvania studying the history of higher education.
  - A concise explanation of your research interest, particularly how it relates to Columbia’s historical timeline in comparison to Penn.
  - Ask about specific primary and secondary sources that may be relevant to your research.
  - Mention any specific themes or events you are interested in exploring.
  - Inquire about the possibility of visiting in person, if that’s feasible for you, or accessing resources digitally.

These are pretty good suggestions! JeepyTA provided similar guidance to each student, reiterating strategies we had discussed in class for how to approach the task. It even went further, providing a template for an email that each student could adapt for the assignment.[2]

Conclusions #1

This automated something I would have happily done myself if asked. But did it replace something I should have done as a teacher? I don’t think so. The value JeepyTA offered was immediacy. Instead of waiting for me or another member of the teaching team to respond to a request for help (something not all students would do), each student immediately received guidance they could turn into action.

My conclusion is that I should abandon my attempts to enliven asynchronous discussions and instead think about the discussion board as a way to support students in specific tasks. In that setup, the LLM’s role is to reiterate class discussions and help students take steps on out-of-class activities by giving feedback on topics we have already discussed. JeepyTA’s responses are visible to me, the teaching team, and the other students. If it gets something wrong, we can correct it. When it gets something right, which it mostly did, the output is available to the student at the moment they are working on the task.

I am not sure how I feel about JeepyTA providing a draft of the email, but I would rather JeepyTA do this than ChatGPT because I am able to review JeepyTA’s outputs, correcting or extending them if need be. I can also update the instructions to JeepyTA, improving the outputs. For example, next time, I will instruct JeepyTA to leave more blank spaces in the email template for students to complete.

Would JeepyTA provide feedback prior to in-class peer review activities in ways that students found helpful and that I believe strengthened the learning process?

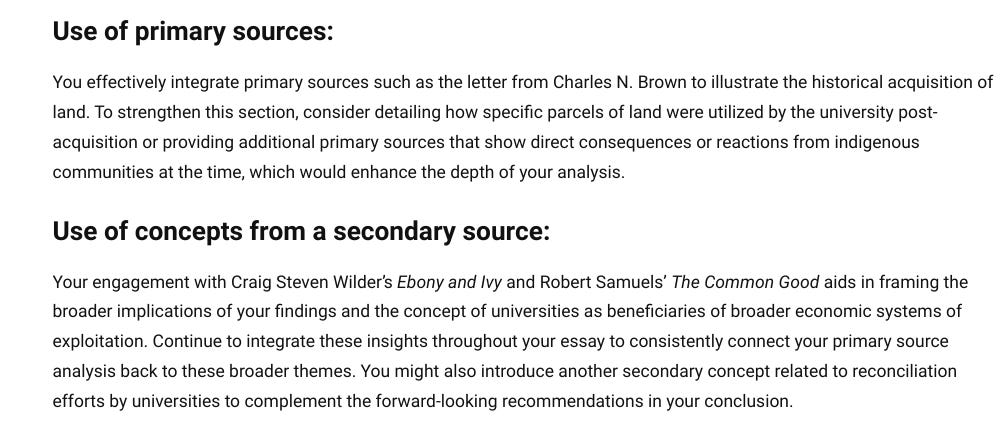

Rather than have JeepyTA emulate how I give writing feedback in order to substitute for feedback I would give, I conceived its role as providing an additional layer of feedback, a preliminary step each student takes before showing a draft to a peer or a member of the teaching team. We configured JeepyTA to give this feedback by post-training it on the assignment and examples of previous first-draft feedback. The training materials included what I call my key terms for evaluating student historical writing, a handout we discussed in class the week before the first draft of the final essay was due and continued to use as a framework for discussing their essays.

Again, the goal here was to add a layer of feedback, not replace one. When the students completed the first draft of their essays, they submitted it to JeepyTA, which generated feedback using the key terms and patterns from the archive of my previous comments on first drafts. I made it clear that this experimental feedback from JeepyTA should be treated skeptically. If JeepyTA said something weird or wrong, that would become an opportunity to engage with AI outputs and discuss the vocabulary we use to review the papers.

For our first peer review workshop, I printed out each student’s draft and included JeepyTA’s comments. In groups of three, students read each draft and added their evaluations and suggestions, including assessing JeepyTA’s feedback. The collision of uneven rough drafts and LLM outputs produced some mistakes.

The suggestion about primary sources used in the draft is perfectly fine, exactly the sort of thing that we would take up together in class by exploring how to find additional primary sources that add context or depth. But in the next section, JeepyTA’s output confused two of the secondary sources. This student used For the Common Good by Charles Dorn and Why Public Higher Education Should Be Free by Robert Samuels to frame their analysis and, at one point in their draft, confused the two books. JeepyTA extended that confusion in its output. Once we identified this mistake, the reviewers pointed the student back to the draft and the bibliography to make corrections.

Overall, there weren’t many examples of the LLM outputs being wrong, and when it made mistakes, they seemed to jumpstart our work in peer review.

Critical feedback is good feedback

JeepyTA’s feedback was critical more often than I had expected. The screen capture above is an example of the sort of feedback I frequently give about a first draft. Students often struggle to make explicit connections between concepts from a secondary source and a paper’s thesis. The student quoted an essay by Isabela R. Morales, the founding editor of The Princeton & Slavery Project, but had assumed rather than made the connection to the paper’s thesis. The draft left the reader unclear about how Morales’s descriptions of early presidents of Princeton as slaveowners related to the concepts and ideas the paper was exploring. This feedback became an opportunity for the peer review group to talk about how to make such connections clear in the draft and, more generally, how to build out a conceptual framework for their essays using secondary sources.

My guidance during the first peer review emphasizes the idea that critical feedback is a gift. I argue that criticizing the work of your peers, politely and clearly, is essential to scholarship. ChatGPT and other LLMs tend to be cheery and encouraging, and, unless they are effectively prompted, don’t give critical feedback. I had thought the students would start the peer review conversations by criticizing the outputs for not being critical enough. That did happen a bit, but more often, they started with some criticism or suggestion from JeepyTA’s output and talked about how to address it in the writing.

Conclusions #2

The depersonalized nature of JeepyTA’s criticism seemed to add value to the peer review that I had not anticipated. Instead of figuring out how to criticize a peer’s paper, the students worked with a machine-generated artifact containing critical analysis. They evaluated that LLM output and then used it as a launching point for their review of the drafts.

You might ask: why didn’t I provide this feedback? After all, it’s my job, right? Nope. My job is to construct an effective learning process. I am trying to create structured learning activities for my students, not tell them how to write better. My practice has long been to refrain from detailed critical comments on a student’s first draft. Because I am the person who will assign the final grade, students tend to treat my feedback as a formula for getting an A, not advice on developing their ideas. Their peers, and now possibly an LLM, are better sources for feedback on early drafts. They might still be aiming for an A, but peer activities are less transactional. Eventually, once students have a fully developed draft, I give feedback through a collaborative grading process, but I don’t start there.

For the initial peer review workshop, I am trying to create an experience in which my students evaluate their own historical writing and that of their peers using a shared framework. Creating a community of inquiry means I step back and let the students figure it out more or less on their own. Did adding JeepyTA outputs defeat this goal? Honestly, I am not entirely sure. But the experience at the initial peer review workshop made me more comfortable with continuing to use an LLM tool as an aid to teaching writing.

The students seemed to move more quickly to applying the key terms to specifics in each draft than in my previous classes. In my experience, that first peer review workshop is often about overcoming a student’s reluctance to criticize a peer’s writing. It felt to me as though we got to genuine peer review much faster by including JeepyTA’s outputs. The students said that including the comments along with the drafts was helpful. From what I observed, this was true.

Framing this as an alternative to the students using ChatGPT made me even more pleased. JeepyTA was aligned with the course objectives and tuned into the concepts we were using to evaluate historical writing. Of course, students had the freedom to use ChatGPT or another LLM in the wild to get feedback, but why would they?

The consensus of the students was that JeepyTA’s feedback was helpful, but not as helpful as the feedback from their peers, the teaching team, and me. That feels right to me. At the last class meeting, my students and I talked about what I should change about the course the next time I teach it. Given how helpful they found JeepyTA, I was surprised by what they recommended.

My next essay, the final one in the series, will explore their recommendations about how I might use JeepyTA and other forms of generative AI in future classes.

[1] There is more about JeepyTA in earlier parts of this essay. I’m repeating these links to this guide and this preprint by Ryan Baker, Maciej Pankiewicz, and Xiner Liu. 2024. “A Step Towards Adaptive Online Learning: Exploring the Role of GPT as Virtual Teaching Assistants in Online Education.” EdArXiv. July 31. doi:10.35542/osf.io/rw45b.

[2] You can see all the prompts for the discussion posts here. My initial strategy for writing prompts benefited greatly from materials available in the OER Commons and the collection of materials in More Useful Things: AI Resources created by Ethan Mollick and Lilach Mollick. Rachel Liu and Maciej Pankiewicz guided the development of these prompts, helping me understand what the model required to produce effective outputs and test those outputs.