Coscientist

Doing science is work. Work can be boring. As students figured out last year, generative AI is pretty good at doing some kinds of boring work.

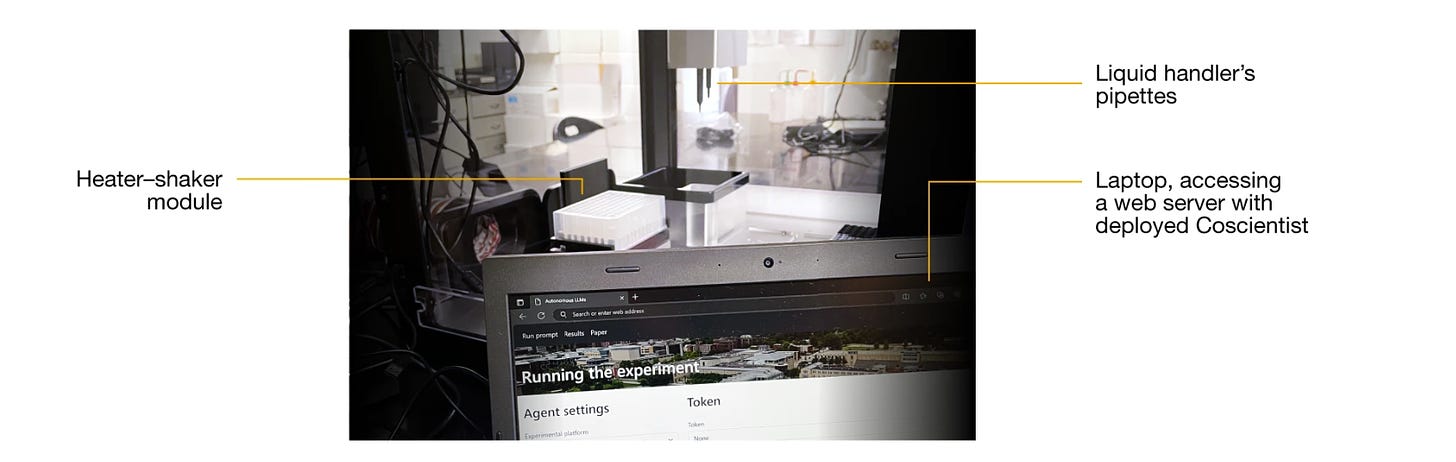

A new paper in Nature suggests that GPT-4 will be using its ability to predict language, including specialized scientific language, to do a lot of boring scientific work. The team hooked up some lab equipment to an API and used different OpenAI models to manage multiple tasks including web searches, document searches, and running physical experiments in the lab. The claim of “chemical reasoning” is quite narrow and appears to mean that they used an LLM to optimize the yield in a chemical reaction through an iterative process. Folding proteins this is not.

Still, this is very cool! So, expect it to be hyped to the max.

Predictive Algorithms are bad news

The Markup has a follow-up to its story about Wisconsin’s Dropout Early Warning System (DEWS). DEWS is a predictive system, designed to identify students at risk of dropping out, that uses race as a factor in its evaluations. Or did until The Markup’s reporting last spring. This week’s story is a first-person account by two students who were subject to the system, and it is part of the series Machine Learning and Impact.

Leadership

Leading an institution of higher education is a thankless job, which is not exactly news. However, I think it is worth contemplating Dan Drezner’s analysis in light of how important the next few years will be to the role research universities will play in creating our AI futures.

𝐀𝐈 𝐋𝐨𝐠 is published every Friday morning. To receive each weekly log in your email inbox, visit my blog on Substack. To receive a notice each week in your LinkedIn feed, click here.