The long and the short of our confidence in AI

𝐀𝐈 𝐋𝐨𝐠 reviews AI Snake Oil and The Ordinal Society

Things are in the saddle,

And ride mankind.

There are two laws discrete

Not reconciled,

Law for man, and law for thing;

The last builds town and fleet,

But it runs wild,

And doth the man unking.

"Ode, Inscribed to William H. Channing"—Ralph Waldo Emerson

During the 2010s, there was a growing sense that among the things being broken in our move-fast digital economy were people’s lives. Algorithm became a dirty word. People woke up to the tragedy of the internet commons. The unicorns and fairy dust of Silicon Valley seemed less magical in light of sitcom parodies, unexplained account suspensions, algorithmic discrimination in hiring, measurable deterioration in adolescent mental health, false imprisonments, and evenings lost to doom-scrolling.[1] At the end of the decade, The Age of Surveillance Capitalism and Race After Technology: Abolitionist Tools for the New Jim Code were published, journalists like Karen Hao and Kashmir Hill were raking the muck, and computer scientists like Joy Buolamwini, Timnit Gebru, and Olga Russakovsky were explaining why bias is a critically important concept in what some people still called machine learning.

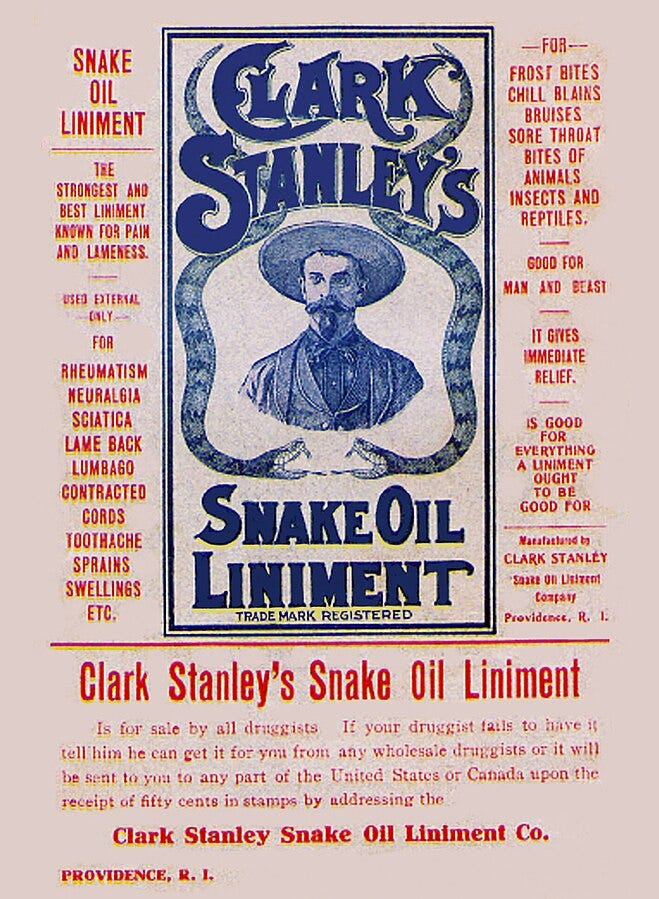

AI Snake Oil

AI Snake Oil originated in this moment. Arvind Narayanan, then an associate professor of computer science at Princeton, gave a talk in 2019, dismantling hype-infested claims about AI. Thanks to attention on a social media platform known as Twitter and a Cory Doctorow post on Boing Boing, the slides were “downloaded tens of thousands of times.” “How to recognize AI snake oil” was a well-timed contribution to a growing critical analysis of Silicon Valley shenanigans. When the book project was announced on a new Substack in 2022, here is how Narayanan and his co-author, Sayash Kapoor, situated their work:

Fortunately, there’s a big community pushing back against AI’s harms. In the last five years, the idea that AI is often biased and discriminatory has gone from a fringe notion to one that is widely understood. It has gripped advocates, policy makers, and (reluctantly) companies. But addressing bias isn’t nearly enough. It shouldn’t distract us from the more fundamental question of whether and when AI works at all. We hope to elevate that question in the public consciousness.

Their Substack became a guide to how the companies selling artificial intelligence and the researchers and journalists writing about what was marketed under that “umbrella term” distracted us from questions about whether and how it works. It has established Narayanan and Kapoor as among the most visible skeptics of Silicon Valley’s bullshit.[2] They dismantle the misleading claims of technology companies, journalists, and academics by writing clearly about how the technology should be understood and evaluated. Crucially, they provide social context, not just technical insight, to make sense of how genuine technological progress, as well as ballyhoo and fraud, have shaped the market in products “powered by AI.”

Consumer markets and rational faith

That market changed dramatically just as the two started writing. One of Narayanan’s early posts about students turning in machine-generated essays anticipated what happened in late 2022 when OpenAI hooked up GPT-3.5 to a chatbot interface and accidentally created The Homework Machine.[3] The mobilization of hype that accompanied the fastest-growing app in the history of apps! greatly expanded what his viral slide deck had identified as a “massive effort to influence public opinion” about the likelihood of a major breakthrough in developing “high-level machine intelligence.”

ChatGPT was, as the enthusiasts insist, a game-changer, and one game it changed was the selling of AI snake oil. The book traces a shift that begins with the predictive AI that predominated in the 2010s before moving to generative AI and content moderation AI. These distinctions are not just technological. They mark the borders of different markets in AI snake oil, each with its own history, dynamics, and hype-driven marketing strategies. ChatGPT, with its surprising capabilities to engage in amusing conversation, sparked speculation about artificial general intelligence (AGI). The sense that transformer-based AI models have brought us close to human-like intelligence has been powering AI hype ever since because, as Narayanan and Kapoor say, “the technology is powerful and the advances are real.”

Of course, “powerful and real” does not mean “high-level machine intelligence,” but thanks to that “massive effort,” there is more talk of AGI than inquiry into the actual advances. Narayanan and Kapoor use what they call the “ladder of generality,” where “each rung on the ladder represents a way of computing that is more flexible, or more general, than the previous one” to explain the development of generative AI. ChatGPT represents a breakthrough in deep learning, a new rung, in that it makes computational power more accessible. Anyone can now program a computer by simply speaking or writing in natural language. No coding is required. This change “has turned AI into a consumer tool,” and its arrival seems sudden, but “this suddenness is an illusion” because the ladder has taken eighty years to build, and its history includes many more mistaken predictions and dead ends than actual breakthroughs.

Narayanan and Kapoor’s concise retelling of that eighty-year story—a story that centers on the perceptron and computer vision rather than on Alan Turing and his language-based test—has convinced me to stop using Large Language Models (LLMs) as a synonym for generative AI models. Their language capabilities are important, but word vectors and language games provide too narrow an understanding of how AI models classify and represent data. More important, the book’s description of generality in computing helped me better understand deep learning’s use of neural networks and how the new AI models impact the ways humans relate to data.

I am a cultural historian, so I appreciate nontechnical, historically minded descriptions of computational processes. Narayanan and Kapoor explain what they call “a profound shift in perspective” due to the elimination of “the need to devise new learning algorithms for different datasets.” Technologists no longer need to select “a model based on an expert understanding of the data.” They can now use the same type of model, making adjustments to the architecture of the model by changing the “number of layers and patterns of connectivity between the neurons.” This new relation between model and data underlies the impressive capabilities of these new models. And it means that, with the right datasets, bureaucratic organizations can use these new tools for general purposes, which includes the potential to replace human labor.

As Narayanan and Kapoor observe, the goal of generality in computing is connected to efficiency and “is a special case of the fact that capitalist means of production strongly gravitate toward more automation in general.” The idea of automating knowledge work has produced much excitement among technology barons and concern among the newly attentive public. This helps explain why discussions of AGI are so often conducted using the vocabularies of dystopian science fiction and eschatological religion.

It may seem as though we are simply talking about technology, but when it comes to AI, we are talking about whether we can or should use the technology to attempt to know the unknowable, what William James described as “our indomitable desire to cast the world into a more rational shape in our minds than the shape into which it is thrown there by the crude order of our experience.” Using AI models to try to predict academic failure, criminal behavior, disease, and job performance is one expression of this desire. So, too, is using AI to moderate the ugly and harmful discourse on social media platforms. The belief that there is some form of computational intelligence that will exceed the general capabilities of human beings to solve these and other social problems is the ultimate expression of faith in rationality.

What’s in that bottle?

Prediction is a form of control, and because we so desperately want control, it is easy to overestimate the power of predictive methods and machines. Narayanan and Kapoor point out that older predictive systems “based on the positions of the stars, or tarot cards, or lines on the palm” are, like modern science, “structured ways to predict the future.” We have reason to prefer the methods of science, but there are limits to what science can predict, which can make scientists appear no better than fortune tellers and astrologers. This is especially true when academics prognosticate instead of explaining how science works.

The habit of confident prediction, especially when expressed probabilistically, gives a rational sheen to the most unhinged speculation. Narayanan and Kapoor don’t use the term, but one of the book’s central points is that we need more fallibilism, a recognition that when it comes to science, we know nothing with absolute confidence. Such uncertainty eases the way for con artists to make fraudulent claims, but identifying snake oil is not just about detecting bullshit; it is also about evaluating the social harms that come with genuine advances. For example, Narayanan and Kapoor write, “The biggest danger of facial recognition arises from the fact that it works really well, so it can cause great harm in the hands of the wrong people.” Many skeptics are so focused on proving AI doesn’t work that they miss it when AI works exactly as intended, sometimes with disastrous consequences for individuals or society.

Given its focus on demystifying the technology, AI Snake Oil provides a surprising amount of cultural analysis, offering not only a reframing of the history of AI but also an overview of social media moderation that makes clear why the “tug of war in which recommendation algorithms amplify harmful content and content moderation algorithms try to detect and suppress it” will not be settled by AI. The book looks beyond the Global North’s anxieties about democratic elections and teenage mental health to call attention to how the lack of moderation by Facebook and other platforms is inciting violence in Ethiopia, Myanmar, and other places rarely mentioned in the US.

As computer scientists, Narayanan and Kapoor frame their analysis around the technology and the flimflam associated with AI and the steps we should take to limit social harm. To understand why so many are willing to exchange so much for products that do not work and are harmful, we need a conceptual frame that explains more about human behavior.

The Ordinal Society

The Ordinal Society explains something often missing from critical accounts about internet technology and AI. In the words of a once-famous cartoon character, “We have met the enemy, and he is us.” Walt Kelly’s Pogo points out the uncomfortable fact that consumer behaviors play a major role in environmental degradation. Marion Fourcade and Kieran Healy show that the same is true of the degradation of the Internet. It is not simply the brothers of technology and greedy corporations who have made these markets in snake oil. Our own, often unexamined, desires are the foundation for the digital world we find ourselves inhabiting.

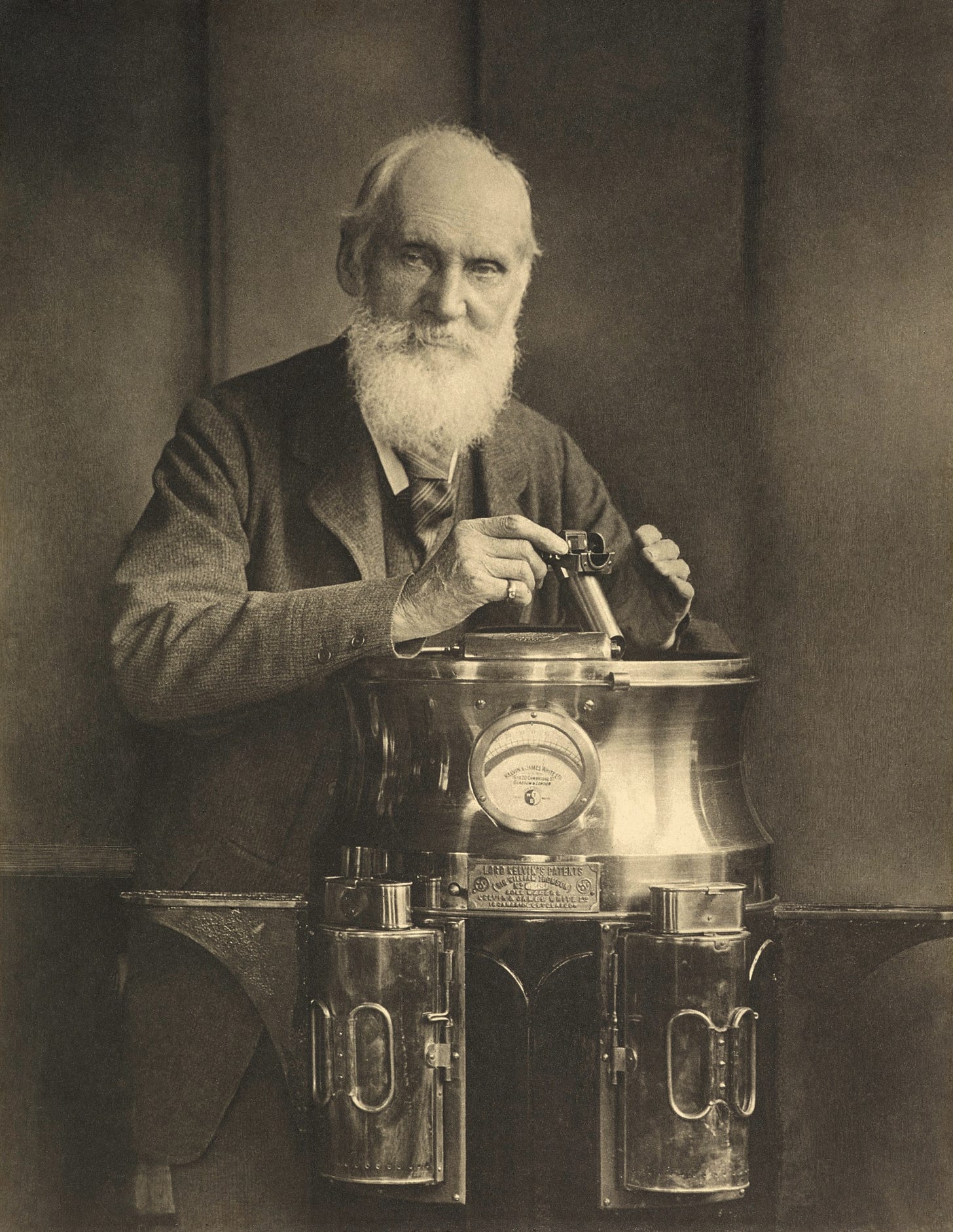

William Thomson explained an essential truth of the modern world: “When you can measure what you are speaking about, and express it in numbers, you know something about it.” Fourcade and Healy describe the social order that has emerged since this observation: “a society oriented toward, justified by, and governed through measurement.” If to measure something is to know it, then to put that measure into an algorithm is to automate our knowledge of it. Such automation, as we have learned, is powerful, especially when organized and mediated through the Internet. It is even more powerful when the “it” is our own sense of self.

Fourcade and Healy argue that we have welcomed that power into our lives for what it gives us, and especially the experiences it provides. Our human desire to rank and be ranked is now realized through an internet-created social “system of organization, evaluation, and control that is remarkably convenient, often delightful, and at times frightening.” It is not simply that giant corporations gather data and use it for their own purposes, though they certainly do. It is that, in so doing, they give people what they want. They tell individuals and institutions where they fit in the social order.

What Fourcade and Healy call the data imperative is an important context for understanding the new relation of AI models to data that Narayanan and Kapoor describe. Fourcade and Healy, both social scientists, are concerned about Silicon Valley’s demand for and control of data. As William S. Burroughs observed, capitalism eats quality and shits quantity. In the digital age, that means massive piles of data. The Ordinal Society, following Shoshana Zuboff, locates the origins of digital capitalism's obsession with data in Google’s realization that the skid marks of online activity could be collected and repackaged. A patent filed in 2003 shows precisely when this epiphany struck—when the technology barons began to see value in a process

in which residual user information was repurposed for targeted advertising. From then on, everybody wanted to pick up the trash—and not only their own. Companies could analyze their own confidential data. Later, they could also absorb volumes of material from the open or commodified web. Later again, they could use either or both to train customized AI models.

This data imperative, turning our digital waste into profitable commodities, has been “a cultural and political accomplishment,” as well as an economic triumph for Google and the other increasingly giant technology companies. The Ordinal Society surveys how academic social science has responded to these developments in ways that are useful for anyone who wants to understand how social theory explains digital capitalism. It offers two insights that help explain markets in AI snake oil.

The first is the danger the data imperative poses for social science. As Google and other giant tech companies complete their transition from consumer-friendly start-ups remaking the world of information for their users to extractive bureaucracies obsessively competing with one another for domination of markets in attention, they have asserted control over “the production of social-scientific knowledge by way of their domination over the data economy.” This has left social scientists outside looking in as “what previously was a free flow of data easily scraped from the web can suddenly disappear into walled gardens or behind an application programming interface (API) that is exorbitantly expensive to access.” As Narayanan and Kapoor also make clear, the theft and lack of transparency associated with training the largest AI models have made this problem worse.

The other insight is that this new world of measurement presented in algorithms has enormous appeal for individuals. Knowing our numbers—BMI, FICO, g-index, GPA, h-index, LDL, SAT—gives us a sense of who we are and a way to measure who we might become. These ordinal scales use data about our past to tell us what we desperately want to know: our future. In an uncertain world, these numbers fix us. As Fourcade and Healy put it, “Their rightness feels hard to deny. The quantities they express rest on a foundation of personal decisions, of individual behaviors, of our own carefully recorded choices. The outputs they provide seem tailored to us, and people like us.”

We compare our numbers to the average or to the scores of our classmates or neighbors. Like the bureaucracies that manage these predictive systems, individuals can use their outputs to understand their risk of dying of heart disease or getting diabetes, or their chances of getting a car loan or being admitted to or hired by an elite college. The rankings pioneered by US News serve a similar purpose for administrators of universities and hospitals.

For both individuals and institutions, the ordinal processes of digital capitalism answer the questions “Where do I fit?” and “How do I improve my position?” with numbers, models, and methods. The Ordinal Society is, so far, the most interesting analysis of the social world we have created out of our “desire to cast the world into a more rational shape in our minds.”

If I refuse

My study for their politique,

Which at the best is trick,

The angry muse

Puts confusion in my brain.

But who is he that prates

Of the culture of mankind,

Of better arts and life?

"Ode, Inscribed to William H. Channing"—Ralph Waldo Emerson

The short con: bureaucratic desires

Numbers, models, and methods are all subject to various forms of exaggeration and fraud, but marketing any product starts with desire. Sure, the “fact” that 4 out of 5 dentists recommend this toothpaste sounds good, but what I am being sold is the promise of whiter teeth. Misleading claims help sell predictive analytics, but it is the bureaucrats’ desire for their administrative machinery to work rationally and efficiently that matters. As Narayanan and Kapoor explain in AI Snake Oil:

decision-makers are people—people who dread randomness like everyone else. This means they can’t stand the thought of the alternative to this way of decision-making—that is, acknowledging that the future cannot be predicted. They would have to accept that they have no control over, say, picking good job performers, and that it’s not possible to do better than a process that is mostly random.

To bureaucrats with an IT budget during the 2010s, the promise of a machine elixir that would provide greater control over business processes was nearly irresistible. After all, the problems most managers face are related to humans in all their messy and irrational glory. In the age of big data and digital transformation, it seemed plausible that a computational model could automate the process of measuring how likely an applicant is to succeed at their job, a student is to fail their classes, or a patient is to develop sepsis during a hospital stay.

In retrospect, it is easy to say this sounds too good to be true, but the claims were “evidence-based” with nice-looking charts presented by smart people, some of whom had PhDs. The short con of predictive AI was selling bureaucrats on the idea that their messy human problems could be automated away. Tired of being forced to explain that hire, that diagnosis, that admissions decision? Just sign here, and this AI model will give you a number that will justify it. For a manager who knows his numbers, it doesn’t just feel right; it feels efficient.

As AI Snake Oil argues, the market in predictive AI snake oil emerged “out of the optimization mindset, in which one tried to formulate a decision in computational terms in order to find the optimal solution and achieve maximum efficiency. The failure of predictive AI is an indictment of this broader approach.” By 2022, bureaucrats responsible for buying technology had become an increasingly skeptical bunch, and not just about the predictive power of AI. The promise of the mid-2010s was that institutional investments in IT optimization would get you solid ROI while leveraging big data to future-proof your digital core in an agile environment. That is to say, predictive AI is but one form of high-tech snake oil.

Thanks to high-profile scams, many of them described in detail in the book, institutions became wary of predictive analytics. Add in the headlines about cryptocurrency and institutions failing to protect or misusing personal data, and the selling power of AI prediction as the answer to management’s HR problems was losing its effectiveness. The sales teams needed something new. In November of 2022, they got it.

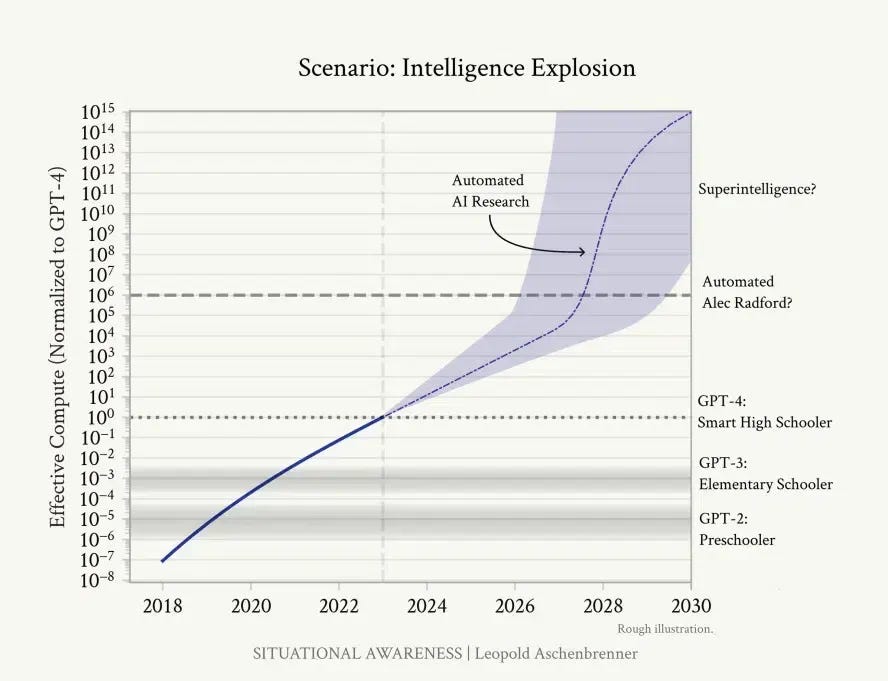

The long con: artificial general intelligence

As ChatGPT opened potential new markets for consumer technology, the AI confidence game changed dramatically. The unicorn dreams of venture capitalists are about markets where numbers go up, and oh so fast. The magic of scaling, whether expressed in terms of user growth or as the line from a better chatbot to superintelligence, attracted that most hapless and willing of marks, the tech investor.

At the center of this new confidence game of selling generative AI snake oil is the dynamo of AGI. This nebulous concept generates currents of uncertainty that flow through the ambitious chatter of technologists, investors, consumers, academics, and writers on the internet. Out of that discourse comes a shared vision of the future. Tech investors emerging from the wreckage of cryptocurrency scams see the new gold rush. Consumers see the science fiction future arriving in two flavors, utopian or dystopian. Journalists see clicks and subscriptions. Academics see new opportunities in their games of status and funding. In this last case, you can be either an enthusiast or a skeptic, but confidence about what happens next is essential to staking your claim.

Fallibilism, when it gets expressed, is weak tea in a discourse dominated by confident enthusiasts and equally confident skeptics. Here is what Narayanan and Kapoor are serving when it comes to AGI:

Currently, the evidence points strongly toward neural networks, but then again, this could be an illusion caused by a herding effect in the AI community. It could also be the case that there is not one single path to AGI and a mix of different approaches will be needed. Or maybe AGI isn’t achievable at all.

This equivocation does the snake oil sales team no good. They need something stronger tasting in their bottles than open-minded inquiry and sober analysis. The advantage of generative AI is that, like actual snake oil, there really is some value there. The temptation to bottle up whatever this is and put a label on it becomes an obligation to those living on what Nate Silver calls “the river,” a gambling mindset with deep roots in American culture. The uncertain truths of this latest advance in machine capability have created an epic opportunity for the right man, a confidence man for the twenty-first century.

The AI snake oil impresario who leads OpenAI is just such a man. From his star turn in the comedy of remarriage last year as he left and came back in one madcap weekend to his slow-motion character subversion into the villain we all wanted him to be, Sam Altman understands that a good story is the key to a complicated and lucrative scam. His prognostications, along with the outrage of his critics, serve Altman’s purpose, which is to distract the public from books like AI Snake Oil or projects like François Chollet’s ARC Prize. Dave Karpf nails it: “The business model of OpenAI isn’t actually ChatGPT as a product. It’s stories about what ChatGPT might one day become.” Alternating boardroom dramatics with scripted demos of black box breakthroughs keeps the AGI currents sparking and crackling, and the eyes on him.

If you avoid distraction, the game is not hard to figure. On one side, there are investors looking for the confidence to buy shares in the science fiction future that AGI will create. On the other, there are the consumers of the ordinal society following their desires down the lonely pathways of digital capitalism. The managers connect the two groups. Investors are also board members and benefactors who, convinced that AI is the new fire, insist that companies and institutions move faster to adopt. Executives and their bureaucrats, especially the ambitious ones, follow that direction, increasing their “spend” on AI products while citing “evidence-based” research, this time about worker productivity, with nice-looking charts presented by smart people, some of whom have PhDs. Consumers who are also workers are solemnly warned that AI may not take your job, but someone who understands AI will.

Onto the stage steps the AI snake oil sales team, anxiety and hope surging through the audience. Want to be rich? Buy these stocks. Lonely? Here’s a friend. Can’t do your homework? Here’s a personalized tutor. Behind at work? Optimize your workflow. Can’t write well? Doesn’t matter anymore. Worried about your job? Enroll in this prompt engineering academy. Confused? Subscribe to this Substack.

Repressed memories of tech bubbles past drive investors to faith that this time is different, which drives the managers to believe that this time optimization through digital technology will work, which drives workers and journalists to believe that there must be something to all this AI talk, which blows hot air into rising balloons of hype that the sales team can point to and marvel. At the center of it all is uncertainty about a technology that uses a whole lot of computation and energy, along with a whole lot of collective human intelligence, to manipulate words and other forms of culture using novel and not-yet-well-understood methods.

Narayanan and Kapoor’s fundamental question remains: Does it work at all? Of course it does. For some things. To some extent. The better questions are “How does it work?” and “What should we do with it?” AI Snake Oil and The Ordinal Society are important contributions to answering these questions.

What happens next?

I don’t know. Neither do the authors of AI Snake Oil and The Ordinal Society, although they offer interesting thoughts about what should happen. Both books seem fairly pessimistic about the potential for democratic reform, but neither offers anything more dramatic. That is a recommendation, not a complaint.

My favorite moment in The Ordinal Society is the authors’ argument against a totalizing construction of the social order they describe. Rather than “relentlessly subordinating every last shred of action and experience to a single template,” they offer that “social life tends to overflow the organizational and institutional matrix imposed on it, even when those institutions provide a powerful basis for coordination and control.” My favorite moment in AI Snake Oil is its support for using lotteries to admit students into highly selective colleges and universities, one of several suggestions offered for fixing broken institutions with something other than broken AI.

The idea that social life overflows its carefully measured prisons and the notion of randomizing the high-stakes game of college admissions are good places to start. We have no idea what effects a specific democratic reform may have on the larger system. Such attempts are not likely to succeed, but given that fallibilism and incrementalism are the best honest folks can do, that sounds a lot better than buying confidence in an AI bottle or deciding all AI models are useless.

As William James would say, we must “postpone the dogmatic answer” and instead pursue open-minded inquiry using the best methods we have. My hopes, such as they are, rest in the notion that as generative AI breaks educational things, as it forces us to confront that schools are among the most broken of institutions, there will be chances for democratic reform. That’s why the next book on my list to review is Josh Eyler’s Failing Our Future: How Grades Harm Students, and What We Can Do About It.

𝑨𝑰 𝑳𝒐𝒈 © 2024 by Rob Nelson is licensed under CC BY-SA 4.0.

1. Once again, with references: sitcom parodies, unexplained account suspensions, systematic discrimination in hiring, measurable deterioration in adolescent mental health, false imprisonments, and evenings lost to doom-scrolling.

2. The authors use the term, as I do, in the Harry Frankfurt “On Bullshit” sense.

3. “The Homework Machine” by Shel Silverstein is an illustrated poem about ChatGPT published in A Light in the Attic (1981).

"skid marks of online activity" 😆

Great review of two books that are now on my reading list. Thanks.

Tech bros seem to have no problem applying fallibilism to LLMs ("We know they hallucinate and are wrong sometimes, but that's okay"), but when you challenge them on their dogmatic belief that AGI is somehow merely months away, they will call you a Luddite and block you. Such a fragile bunch of true believers.

This is one of my favorite entries so far, Rob! I learned so much by reading how you compare these two books! AI Snake Oil has been on my list for a while, but The Ordinal Society is new to me!